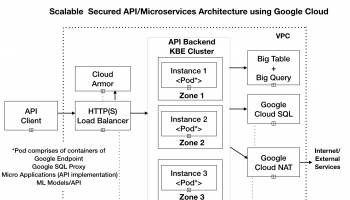

Containers are becoming a standard way to run and scale microservices across multiple cloud providers. With Kubernetes, deploying, scaling, and managing containerized applications, in the cloud or on premises, is now mainstream and highly streamlined.

To build a production-grade environment, however, you need a host of other components: a Virtual Private Network (VPN), endpoint management for microservices, a load balancer to balance requests over various protocols (HTTP, HTTPS, WebSocket), SSL configuration, health monitoring of services, network configuration such as IP whitelisting and network address translation (NAT) for outbound connections, and logging at the various entry points in your application.

In this post, I will go through the high-level steps to create a production-ready environment on Google Cloud for deploying microservices. The steps are generic and can be applied to build your own production topology along similar lines.

Environment and Solution Overview

We will deploy a set of microservices (as containers) on a Google Kubernetes Engine (GKE) cluster. We will use Google Endpoints for API management and deploy the Google Endpoints container alongside our microservice containers.

We will then create an Ingress Controller (of type Load Balancer) and expose our application microservices over HTTPS. All incoming HTTPS requests go to a load balancer, which directs them to one of the nodes in the Kubernetes cluster. On the node, a request first goes to Google Endpoints (which validates the endpoint key and logs every endpoint request) and then to the respective microservice endpoint.

We also need to ensure that only authorized IPs can access our microservices. We will learn how to whitelist IPs using two approaches: Google Cloud Armor, and the Nginx Ingress Controller (instead of the default Google Ingress Controller).
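
Whichever component enforces it, IP whitelisting comes down to checking the caller's address against a list of allowed CIDR ranges. The snippet below is only a minimal sketch of that check using Python's standard `ipaddress` module; the ranges and the `is_allowed` helper are hypothetical illustrations, not part of Cloud Armor or Nginx Ingress.

```python
import ipaddress

# Hypothetical allowlist: these are documentation-only example ranges,
# not addresses taken from the article.
ALLOWED_RANGES = ["203.0.113.0/24", "198.51.100.17/32"]


def is_allowed(client_ip, allowed_ranges=ALLOWED_RANGES):
    """Return True if client_ip falls inside any of the allowed CIDR ranges."""
    address = ipaddress.ip_address(client_ip)
    return any(address in ipaddress.ip_network(cidr) for cidr in allowed_ranges)


print(is_allowed("203.0.113.25"))  # inside 203.0.113.0/24
print(is_allowed("192.0.2.10"))    # not covered by any range
```

In a real deployment this decision is made at the edge (by a Cloud Armor security policy or by the Nginx Ingress Controller), so the microservices themselves never see requests from blocked addresses.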

Similarly, for outbound connections, we will connect to third-party services. These services have similar IP whitelisting requirements, so we need to provide the set of outbound IPs from which we will connect. For this, we will use Google Cloud NAT, which gives our private Google Kubernetes Engine (GKE) cluster the ability to connect to the Internet and provides static outbound IPs that third parties can whitelist on their servers.
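
As a rough illustration of what the Cloud NAT setup involves, the sketch below builds (but does not run) the three `gcloud` commands typically used: reserve a static address, create a Cloud Router, and attach a NAT configuration that uses the reserved address. All resource names, the region, and the network are hypothetical placeholders, and the flags reflect my understanding of the `gcloud` CLI, so check them against the current documentation before use.

```python
def cloud_nat_commands(region, network, address_name, router_name, nat_name):
    """Build the gcloud commands for a NAT gateway with one static outbound IP.

    The commands are returned as argument lists and are NOT executed here.
    """
    return [
        # 1. Reserve a static external IP that third parties can whitelist.
        ["gcloud", "compute", "addresses", "create", address_name,
         f"--region={region}"],
        # 2. Create the Cloud Router that will host the NAT configuration.
        ["gcloud", "compute", "routers", "create", router_name,
         f"--network={network}", f"--region={region}"],
        # 3. Create the NAT config, pinned to the reserved address.
        ["gcloud", "compute", "routers", "nats", "create", nat_name,
         f"--router={router_name}", f"--region={region}",
         f"--nat-external-ip-pool={address_name}",
         "--nat-all-subnet-ip-ranges"],
    ]


# Hypothetical names, used only for illustration.
for command in cloud_nat_commands(
    region="us-central1",
    network="demo-network",
    address_name="demo-nat-ip",
    router_name="demo-router",
    nat_name="demo-nat",
):
    print(" ".join(command))
```

Pinning the NAT gateway to a reserved address, rather than letting it allocate addresses automatically, is what makes the outbound IP stable enough to hand to a third party.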

High-Level Steps

The following are the high-level steps we will carry out to build and deploy our microservices configuration. We assume that the Google Cloud project has already been created.

Solution 1 – Using Google Ingress Controller

- Create a VPN.

- Create a private Google Kubernetes Engine (GKE) cluster.

- Create the Cloud NAT configuration.

- Download the microservice application and deployment scripts from GitHub.

- Build the microservice container.

- Push the microservice container to Google Container Registry.

- Deploy the endpoint for the project.

- Create the Workload, Service, and Ingress (GCE ingress).

- Invoke the microservice.

- Configure Cloud Armor.

- Test the microservices with Cloud Armor.

Solution 2 – Using Nginx Ingress Controller

Solution 1 above uses the default Google Ingress Controller. We can also use the Nginx Ingress Controller, which provides add-on features such as IP whitelisting, rule configuration, and HTTP-to-HTTPS redirects. The deployment process is the same as in Solution 1, except for the Ingress and Cloud Armor steps. Instead of creating a GCE ingress, we first install Nginx Ingress on our Kubernetes cluster and then deploy the Nginx Ingress configuration for our application. We don't need Cloud Armor, because Nginx Ingress supports IP whitelisting directly.
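
To make the difference between the two solutions concrete, here is a minimal sketch that generates an Ingress manifest for either controller. It is not the article's actual deployment script: the resource names, port, and CIDR range are hypothetical, and it assumes the `kubernetes.io/ingress.class` annotation and the ingress-nginx `whitelist-source-range` annotation are how the controller and allowlist are selected in your cluster version.

```python
import json


def build_ingress(name, service_name, service_port, ingress_class,
                  allowed_cidrs=None):
    """Return a Kubernetes Ingress manifest (as a dict) for the given controller."""
    # The ingress class selects the controller: "gce" or "nginx".
    annotations = {"kubernetes.io/ingress.class": ingress_class}
    if ingress_class == "nginx" and allowed_cidrs:
        # Nginx Ingress enforces the IP allowlist itself, so Cloud Armor
        # is not needed in this variant.
        annotations["nginx.ingress.kubernetes.io/whitelist-source-range"] = (
            ",".join(allowed_cidrs)
        )
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name, "annotations": annotations},
        "spec": {
            # Send all traffic to one Service (hypothetical name and port).
            "defaultBackend": {
                "service": {"name": service_name,
                            "port": {"number": service_port}}
            }
        },
    }


# Hypothetical values, used only for illustration.
manifest = build_ingress(
    name="demo-ingress",
    service_name="demo-service",
    service_port=80,
    ingress_class="nginx",
    allowed_cidrs=["203.0.113.0/24"],
)
print(json.dumps(manifest, indent=2))
```

Because `kubectl apply -f` accepts JSON as well as YAML, the printed manifest can be saved to a file and applied as-is. Passing `ingress_class="gce"` produces the Solution 1 variant, where whitelisting is handled by Cloud Armor instead of an annotation.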