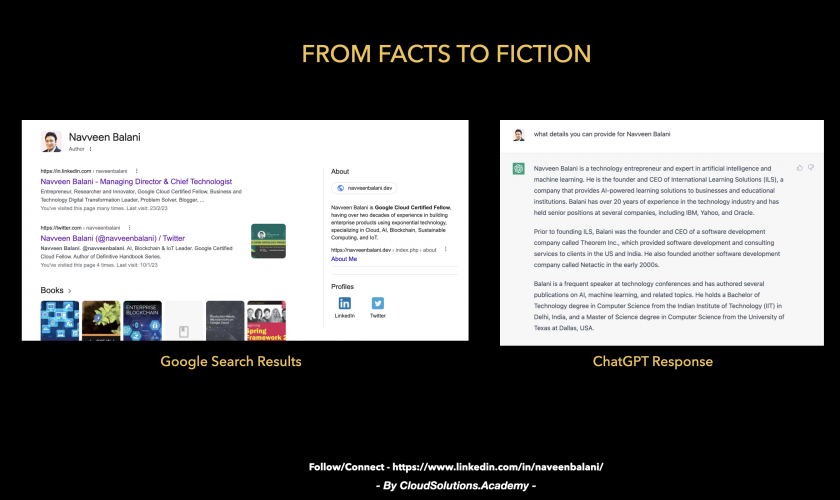

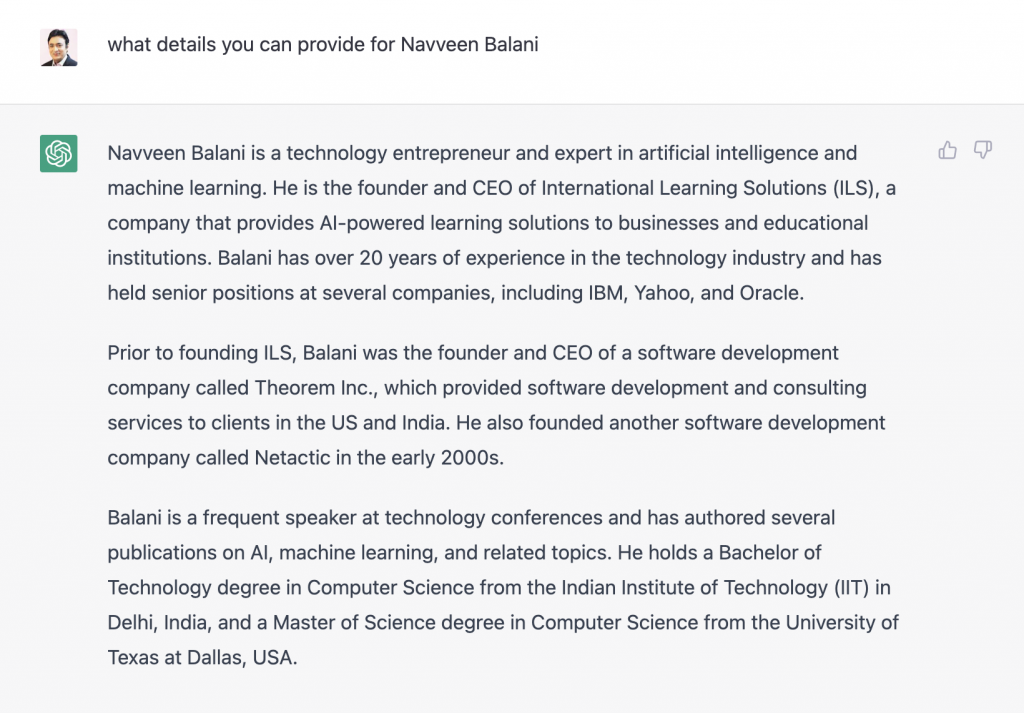

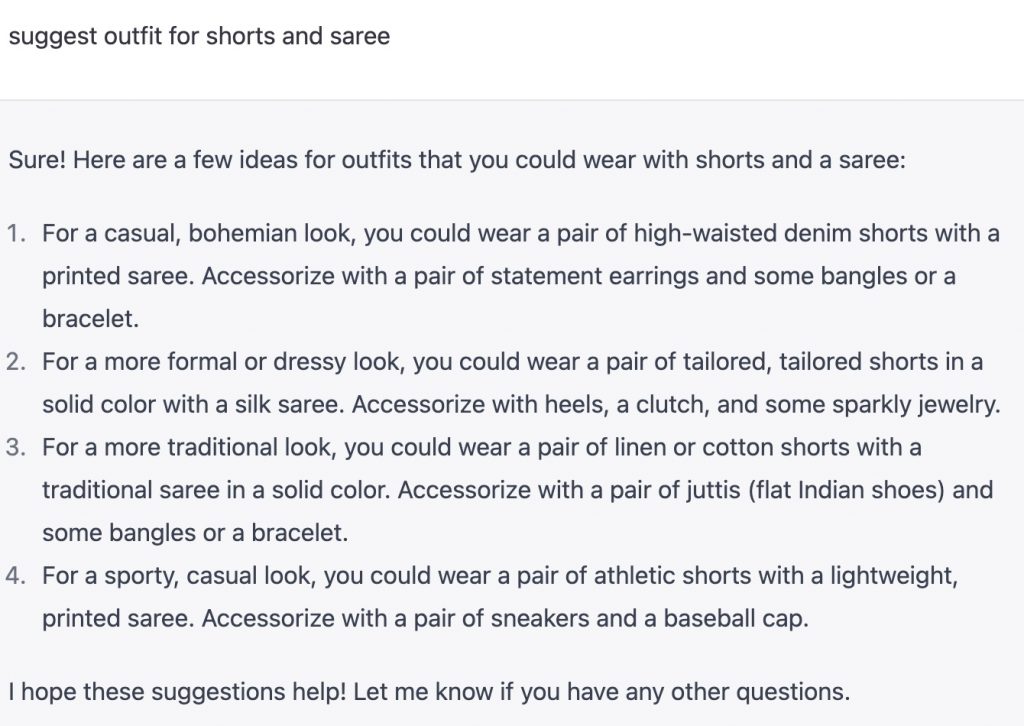

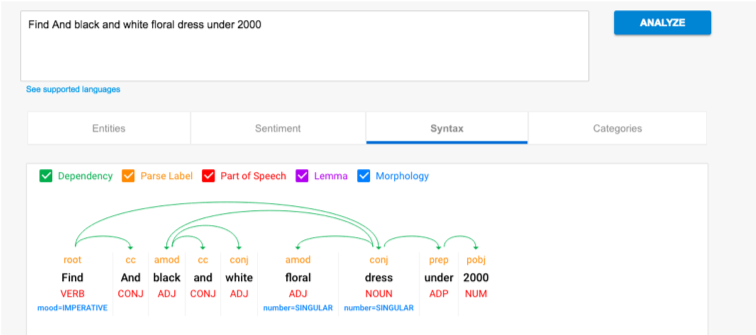

ChatGPT seems good at converting facts to fiction. I asked ChatGPT about myself, and around 70% of the information it returned is cooked up (see image below). On a lighter note, maybe it’s predicting a future that I am unaware of :). But what about the past details? I would need a time machine to go back and change them. Hopefully, I will write a post on the time machine someday.

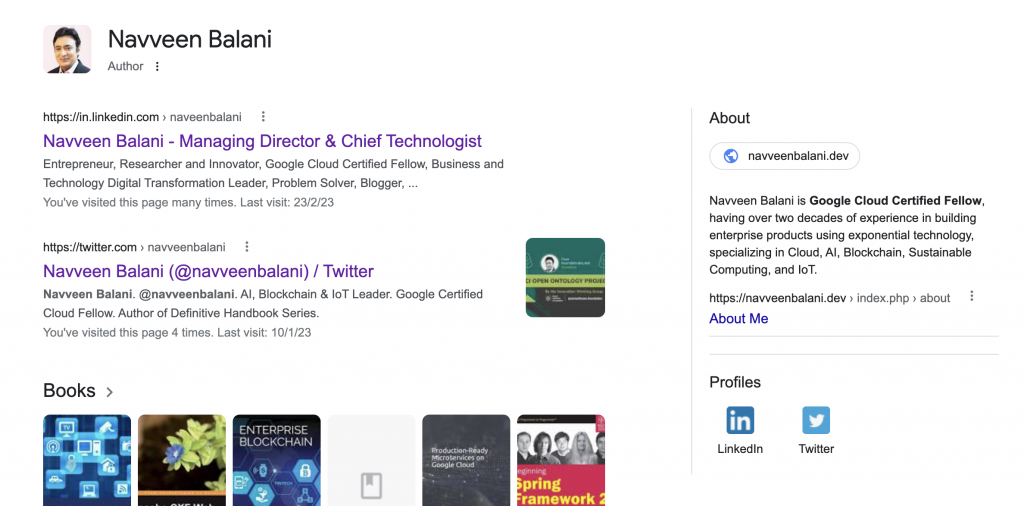

With Google Search, all the correct details and my website show up.

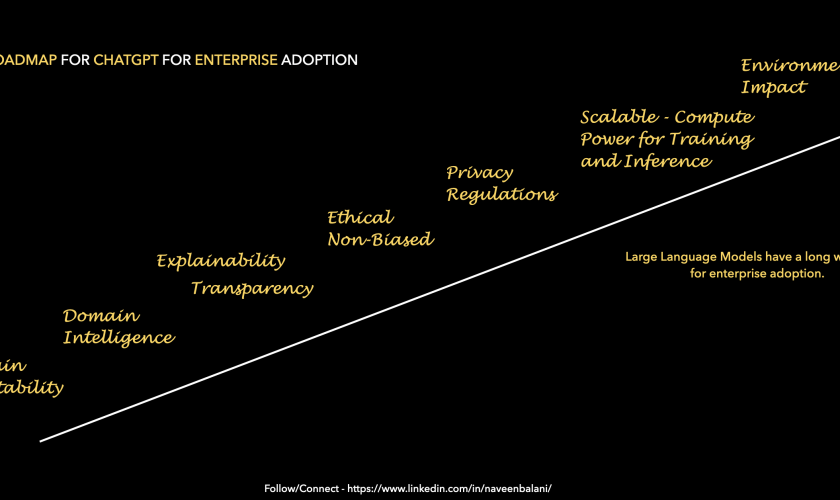

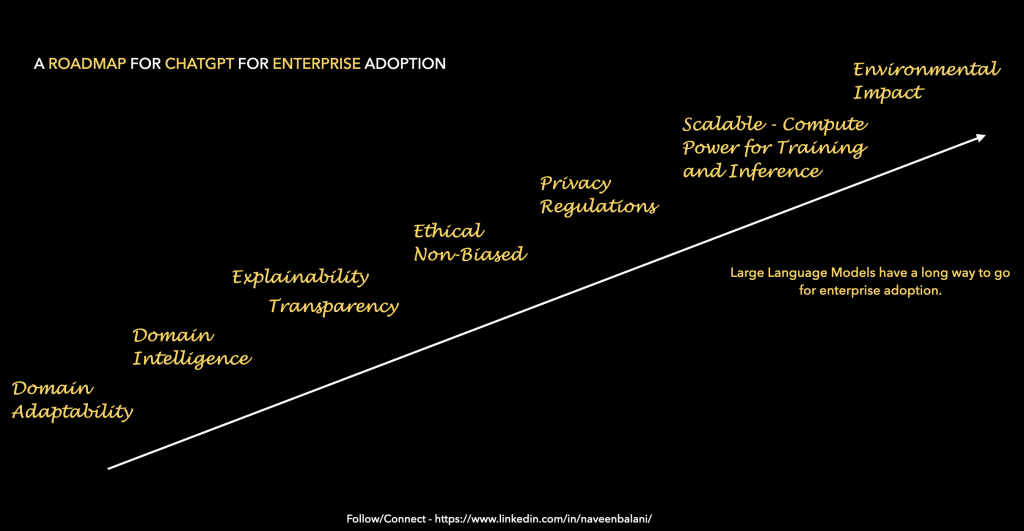

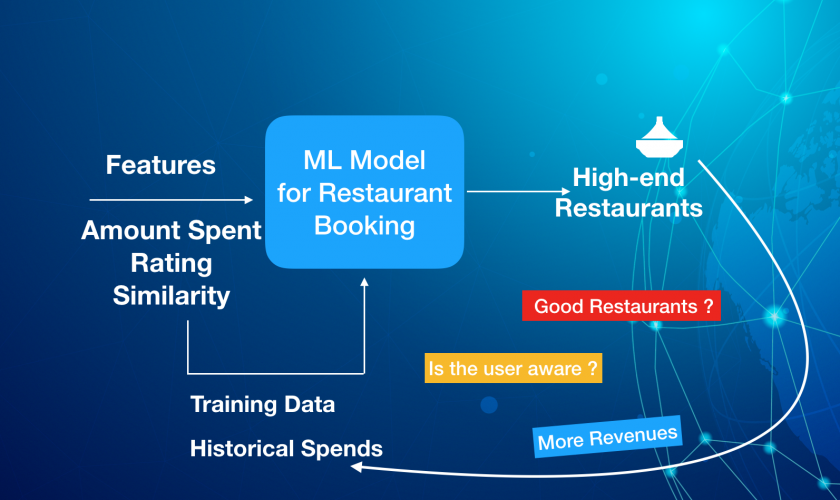

With ChatGPT, I expected at least this information to be correct, as it’s readily available. The sources could be LinkedIn, my website, or other credible sources. Retrieving it requires no reasoning and no complex deep learning network. The biggest problem with ChatGPT’s response is that there is no explainability and no detail on the sources that were used to construct it. How can one verify the correctness of the response? The responses are generated dynamically even when that is not required. Unless you design with explainability in mind, the issues around trust and transparency will not be resolved. There are other issues around bias, ethics, and confidentiality, which I will talk about in future posts.

Unless you already know the right answer, it is difficult to spot the errors in the above response, because the answers are grammatically correct and sound plausible. And this is a very simple scenario. Moving from general intelligence to specific verticals like healthcare (for instance, diagnosis) increases the complexity of large language models and poses significant challenges. I have discussed this in one of my previous posts, on the lack of domain intelligence in ChatGPT (and other conversational engines).

I can’t predict the future, but I believe Google might be better positioned to integrate generative AI into its search engine. Hopefully, they follow their 7 AI principles (https://ai.google/principles/) before the release, to make AI applications transparent, explainable, and ethical. “Slow but steady wins the race” might come true for Google.

For the AI-powered Bing search engine, some early feedback and interesting facts about long chat sessions going wrong are documented on the Bing blog: https://blogs.bing.com/search/

In my next post, I will talk about an interesting subject: “Similarity syndrome in ChatGPT”.