Not long ago, the tech world was abuzz with a futuristic concept known as the Metaverse. This interconnected universe of virtual reality spaces, where individuals could interact in a simulated environment, was hailed as the future of technology. Fast forward to the present, and the hype around the Metaverse has fizzled out considerably. The technological focus has now shifted towards Generative AI, with the spotlight on Large Language Models (LLMs) like GPT-4 and Google’s Bard. But why has this shift happened, and what does it mean for the future of technology?

The Metaverse Hype and Its Fade

The Metaverse, inspired by science fiction, promised a future where people could virtually live, work, and play in a digitally created universe. The possibilities seemed endless: avatars interacting in virtual spaces, immersive gaming experiences, and a revolution in remote work and social interaction.

However, the Metaverse hype began to fade due to several challenges. Firstly, the technological infrastructure required to create a fully immersive, interconnected virtual universe was found to be more complex than initially anticipated. From achieving high-quality, real-time 3D graphics to creating an inclusive and universal user interface, the hurdles were numerous and steep.

Secondly, the economic and business models of the Metaverse remained elusive. Monetization strategies that could support the massive infrastructure while providing value to users were hard to identify and implement. Moreover, the question of who would control and govern the Metaverse raised issues of centralization versus decentralization, leading to further complications.

Finally, the sheer scale of the Metaverse presented unique challenges. Coordinating multiple platforms and technologies to work seamlessly was a considerable task. It required not just advanced technology but also extensive collaboration and standardization across industries and platforms – a feat easier said than done.

The Rise of Generative AI and LLMs

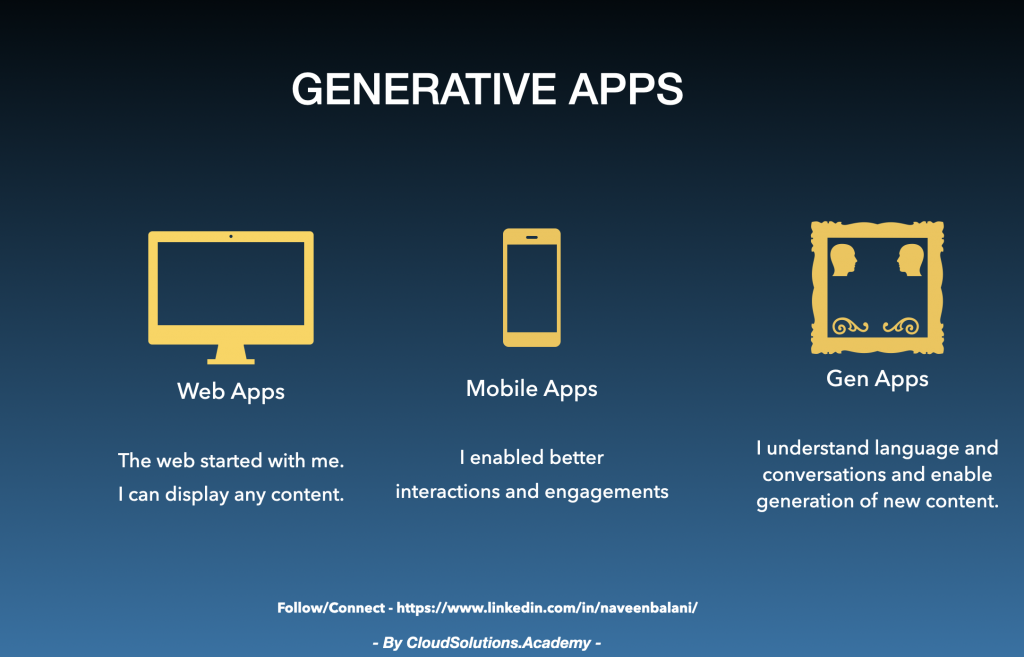

As the Metaverse hype faded, attention turned towards another transformative technology: Generative AI, specifically Large Language Models (LLMs) like GPT-4 and Google Bard. These AI models are capable of understanding and generating human-like text, making them powerful tools for a multitude of applications.

The hype around LLMs is not without reason. They can generate high-quality text for a variety of uses, from creative writing and customer service to programming and academic research. They can also help democratize access to information and educational resources, providing personalized tutoring and making knowledge more accessible.

Moreover, LLMs like GPT-4 and Google Bard have shown remarkable advancements in understanding context and generating nuanced responses, bringing us closer to the goal of creating AI that can truly understand and mimic human communication.

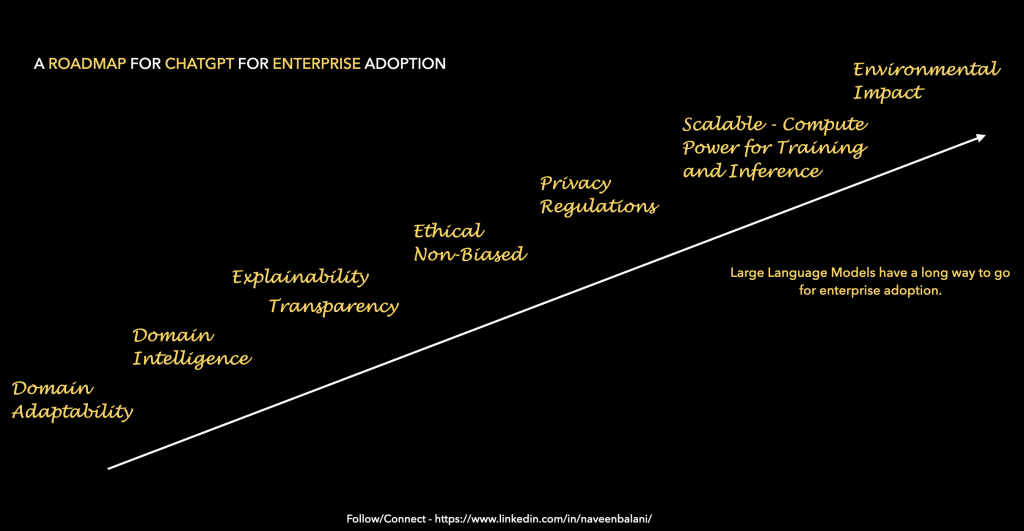

Challenges and Future Prospects of Generative AI

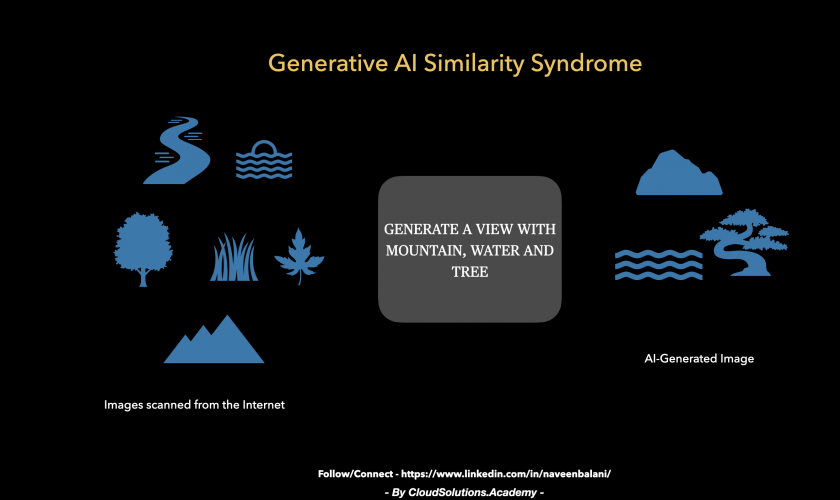

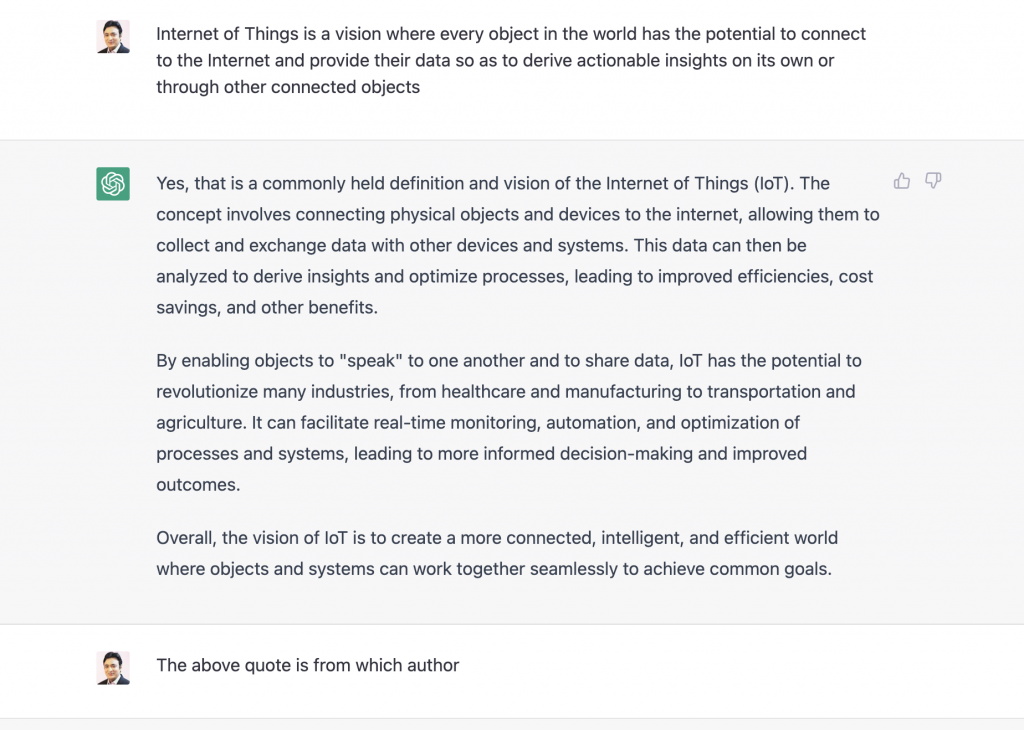

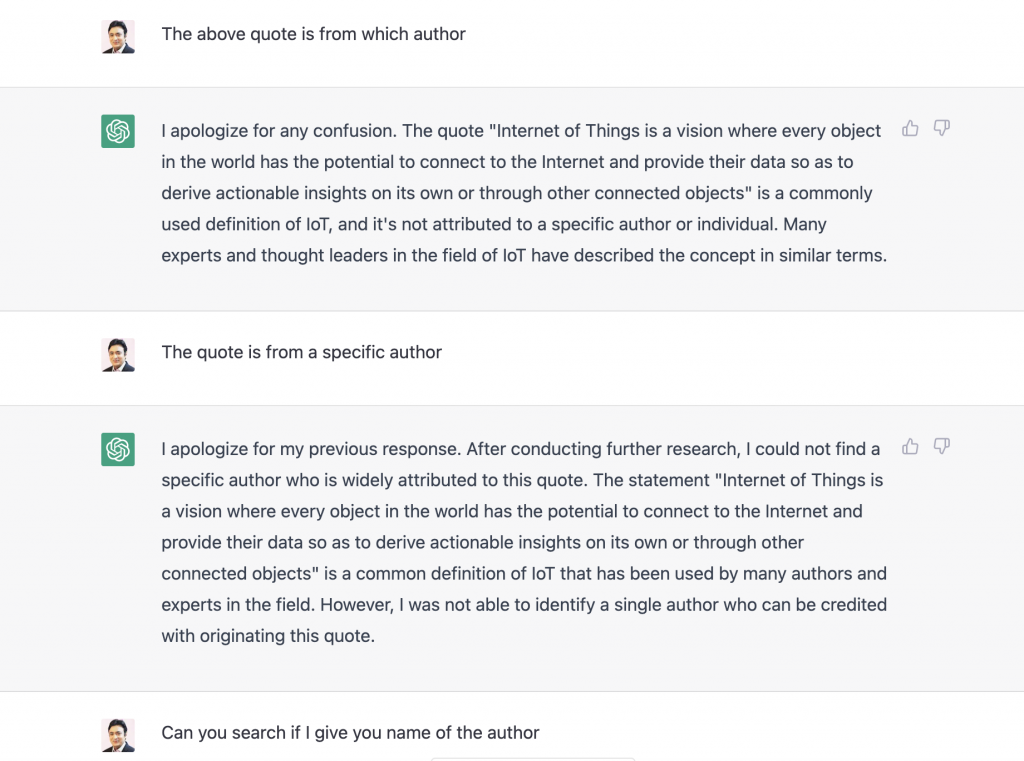

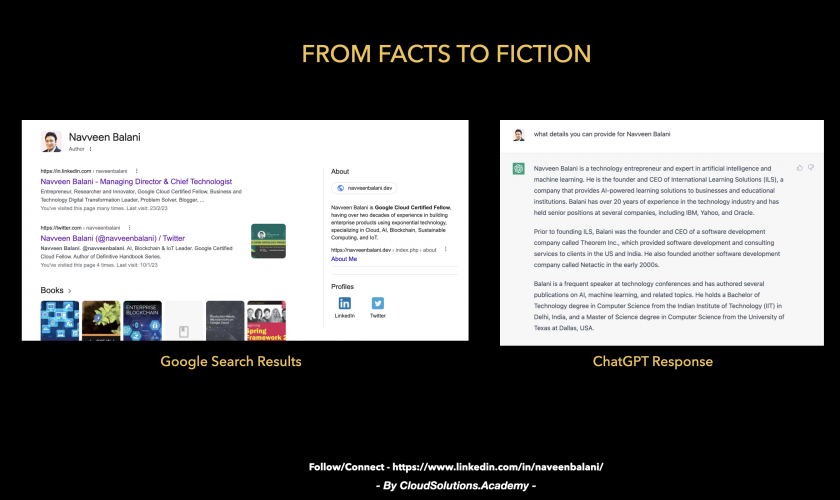

However, as with any transformative technology, Generative AI and LLMs face their own set of challenges. Ethical concerns are at the forefront. The potential for misuse of these models to spread misinformation, generate deepfake content, or automate malicious activities is a significant worry.

Further, while these models are impressive, they don’t truly understand the content they generate. They are statistical models that generate text based on patterns in the data they were trained on. This leads to potential biases in the output, reflecting the biases present in the training data.
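To make the "statistical patterns" point concrete, here is a minimal sketch of a toy bigram model in Python. It is nowhere near a real LLM, which relies on large neural networks rather than word-pair counts, and the corpus and function names (`TOY_CORPUS`, `train_bigram_model`, `generate`) are made up for illustration. It only shows the general idea that generated text, and any skew in it, comes straight from the training data.

```python
import random
from collections import Counter, defaultdict


def train_bigram_model(corpus):
    """Count, for every word, which words follow it in the training text."""
    follower_counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current_word, next_word in zip(words, words[1:]):
            follower_counts[current_word][next_word] += 1
    return follower_counts


def generate(model, start_word, max_words=8, seed=0):
    """Build a word sequence by sampling each next word in proportion to its count."""
    rng = random.Random(seed)
    words = [start_word]
    for _ in range(max_words - 1):
        followers = model.get(words[-1])
        if not followers:
            break  # this word was never followed by anything in the training data
        candidates = list(followers.keys())
        weights = list(followers.values())
        words.append(rng.choices(candidates, weights=weights, k=1)[0])
    return " ".join(words)


# Hypothetical, deliberately skewed training data (an assumption for illustration).
TOY_CORPUS = [
    "the engineer fixed the server",
    "the engineer fixed the bug",
    "the engineer fixed the server",
    "the nurse helped the patient",
]

model = train_bigram_model(TOY_CORPUS)

# "engineer" follows "the" three times as often as "nurse" does, so the model
# will favor it. That is the skew of the data showing through, not understanding.
print(model["the"])
print(generate(model, "the"))
```

The model has no notion of what an engineer or a nurse is. It only knows which word tended to follow which, so whatever imbalance exists in the training text reappears in the output.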

In spite of these challenges, the hype around Generative AI and LLMs seems more justified than the hype around the Metaverse, in part because the technology has already been made accessible to the public. It has already shown its value in numerous applications, and with the right guidelines and ethical considerations in place, its potential benefits far outweigh the risks.

Conclusion

While the Metaverse represented an exciting vision of a virtual future, its realization proved to be more complex and fraught with issues than initially anticipated. Conversely, the rise of Generative AI and LLMs appears to be more grounded in reality, with tangible benefits and applications already visible.

However, it’s crucial not to let the hype overshadow the potential risks and challenges associated with Generative AI and LLMs. Robust regulation, ethical guidelines, and transparency in how these models are trained and used are essential to prevent misuse and mitigate harmful impact.

In the end, the hype around technological advancements like the Metaverse, Generative AI, and LLMs provides valuable lessons and guides us closer to our goal of leveraging technology for the betterment of humanity. It’s not the hype that determines the success of a technology, but its impact, its ability to address real-world problems, and the safeguards in place to prevent its misuse.