This article is part of the IoT Architecture Series – https://navveenbalani.dev/index.php/articles/internet-of-things-architecture-components-and-stack-view/

In the previous article, we went through the Azure IoT stack. In this article, we go over building the connected car solution.

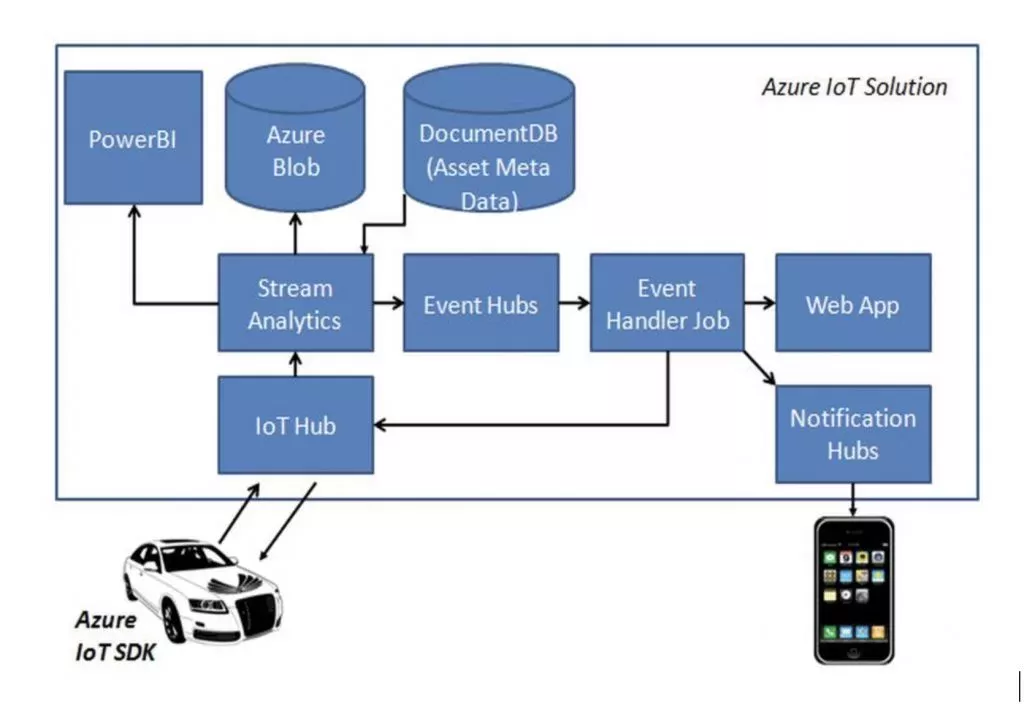

The solution strategy is to use the Azure IoT services described earlier to build the connected car IoT application.

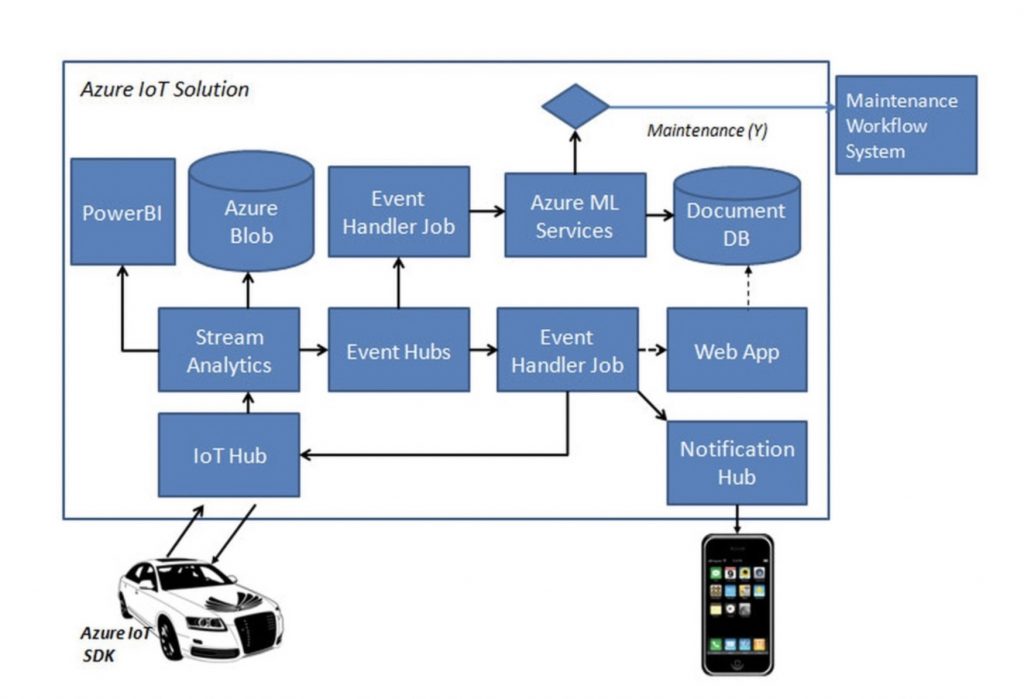

Before messages can be received and processed on the Azure platform, several activities need to be completed. Our solution uses two approaches to process the incoming data: real-time analysis and batch analysis. The real-time approach processes the continuous stream of data arriving at IoT Hub from devices and takes the required action at runtime (such as raising an alert, sending data back to devices, or invoking a third-party service to create a maintenance order). Batch analysis stores the data for further analysis and runs complex analytics jobs or reuses existing Hadoop jobs for data analysis. Batch analysis is also used to develop and train machine learning models iteratively; the deployed models are then used at runtime to drive real-time actions.

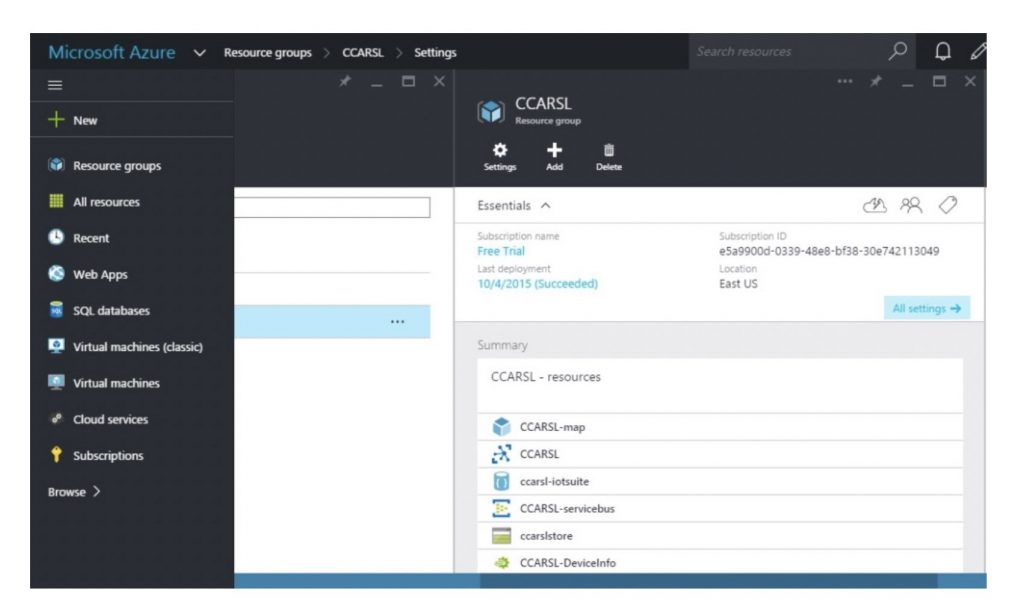

The following image shows the Azure Management Portal, where a set of tasks must be executed.

The following are the high-level steps that need to be performed in the Azure Management Portal:

- Create Resource Group

- Create an IoT Hub

- Create Device Identity

- Provision Hardware devices

- Create Storage Service

- Create Azure Stream Analytics Jobs

- Create Event Hubs

- Create PowerBI dashboards

- Create Notification Hubs

- Create Machine Learning (ML) model

We discussed all of the above capabilities in the earlier article, except the Resource Group. A Resource Group is a container for the resources related to a specific application under the same subscription. We create one resource group for the connected car, and all of its resources are placed in this resource group and hosted in the same location. We will not go over the configuration steps in detail; instead, we summarize one execution flow for the connected car use case that uses the above resources.

Real-time Flow

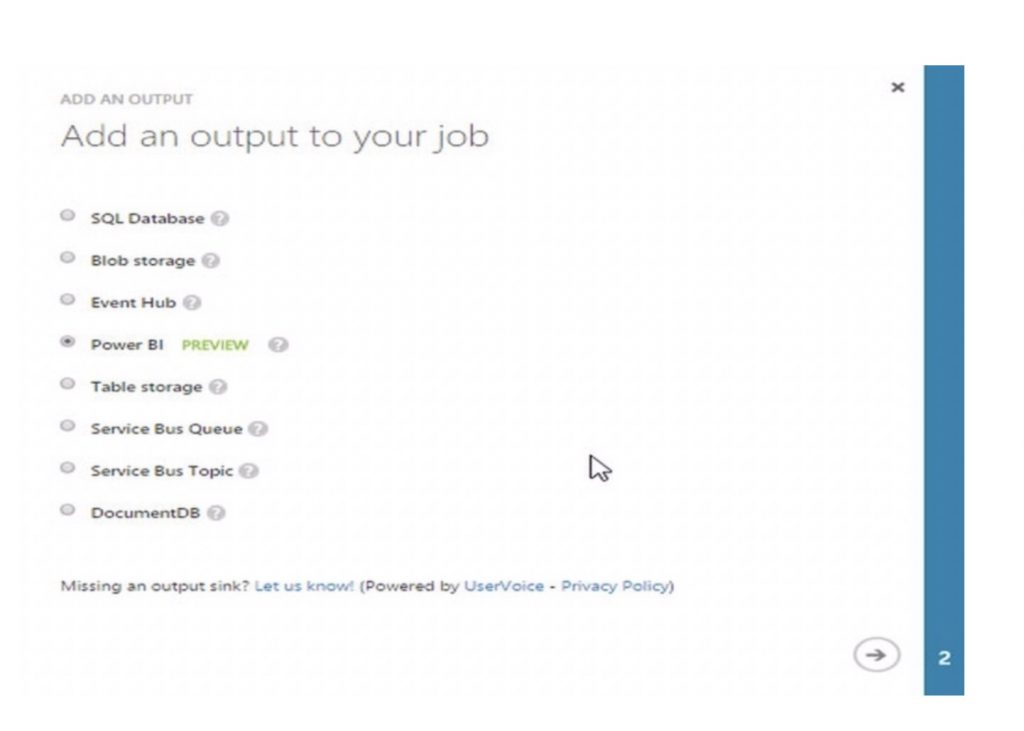

The IoT Hub receives data from the connected car device over AMQP. Once the data is received, the stream is consumed by Azure Stream Analytics jobs. When configuring an Azure Stream Analytics job, you specify IoT Hub as the input source, along with the input format (JSON) and encoding (UTF-8). This streams all data from IoT Hub to the Azure Stream Analytics job. In the output configuration, you specify where the output of the job should be stored, for instance, Blob Storage, Event Hubs, PowerBI, etc. The following image shows the list of output options:

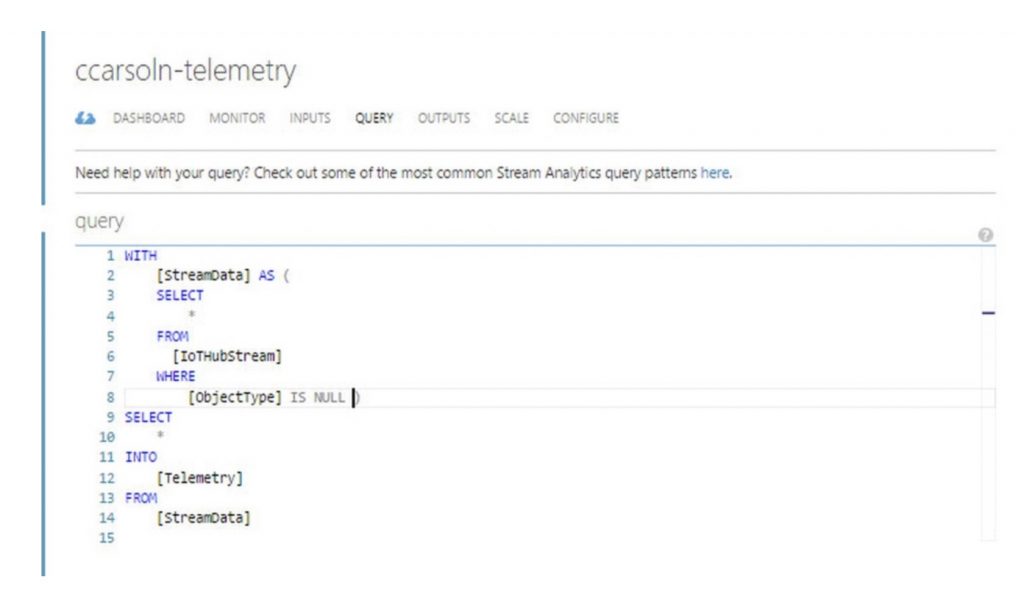
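Because the job's input is configured as JSON with UTF-8 encoding, each message the car sends to IoT Hub has to be a UTF-8 encoded JSON document. The Python sketch below shows one way a device could build such a payload and how it decodes back into fields. The field names (`deviceId`, `speed`, `engineOilLevel`, `tirePressure`, `timestamp`) are hypothetical, and the transport itself (sending the bytes to IoT Hub over AMQP, for example with an Azure IoT device SDK) is deliberately left out.

```python
import json
from datetime import datetime, timezone


def build_telemetry(device_id: str, speed_kmh: float, oil_level_pct: float,
                    tire_pressure_psi: float) -> bytes:
    """Serialize one reading as UTF-8 encoded JSON (hypothetical schema)."""
    reading = {
        "deviceId": device_id,
        "speed": speed_kmh,
        "engineOilLevel": oil_level_pct,
        "tirePressure": tire_pressure_psi,
        # ISO 8601 timestamp in UTC
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }
    # ensure_ascii=False keeps non-ASCII text as-is; encode() produces UTF-8 bytes
    return json.dumps(reading, ensure_ascii=False).encode("utf-8")


def parse_telemetry(payload: bytes) -> dict:
    """Decode a payload the way a JSON / UTF-8 input interprets it."""
    return json.loads(payload.decode("utf-8"))


if __name__ == "__main__":
    payload = build_telemetry("car-001", 112.5, 18.0, 29.5)
    print(parse_telemetry(payload)["speed"])  # 112.5
```

Keeping serialization in one function makes it easy to keep the device schema in step with what the Stream Analytics query expects.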

In the Query tab of the Azure Stream Analytics job, you specify a query in a SQL-like language that operates on the input data and produces the output. The output (in JSON format) is delivered to the configured output channel.
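To make the input, query, and output model concrete, the Python sketch below mimics what the first job (described next) does: a pass-through query, similar in spirit to one Stream Analytics `SELECT * INTO <output> FROM <input>` statement per output, that copies every event unchanged to each configured output. The in-memory lists standing in for Blob storage and PowerBI, and the output names, are hypothetical placeholders rather than Azure APIs.

```python
import json
from typing import Callable, Dict, Iterable, List

Event = Dict[str, object]
Query = Callable[[Event], Event]


def select_all(event: Event) -> Event:
    """Pass-through query: the equivalent of SELECT * (returns a copy)."""
    return dict(event)


def run_job(events: Iterable[Event], query: Query,
            outputs: Dict[str, List[str]]) -> None:
    """Apply the query to each input event and write the JSON result to every output."""
    for event in events:
        result = json.dumps(query(event))
        for sink in outputs.values():
            sink.append(result)


if __name__ == "__main__":
    # Hypothetical in-memory stand-ins for the Blob and PowerBI outputs
    outputs = {"blob-archive": [], "powerbi-dashboard": []}
    run_job([{"deviceId": "car-001", "speed": 88}], select_all, outputs)
    print(outputs["powerbi-dashboard"])  # ['{"deviceId": "car-001", "speed": 88}']
```

Swapping `select_all` for a function that filters or projects fields is the Python analogue of changing the query text while leaving inputs and outputs untouched.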

For our connected car scenario, we create two Azure Stream Analytics jobs. The first job takes IoT Hub as its input, and its query selects all the incoming data. Two outputs are configured: one writes the data to Azure Blob storage for further analysis, and the other sends it to PowerBI to build a dashboard. The following image shows a snippet of the Query view:

For the second Azure Stream Analytics job, we create two inputs: the first is IoT Hub, and the second is the Asset DB, which contains the asset metadata. In the query, we create condition-based rules that trigger when a reading falls outside its permissible limits (for example, speed above 100 km/h, low engine oil, or low tire pressure). The rules correlate the asset metadata with the runtime data of the connected car, so that conditions are evaluated against each asset's specification. The asset specification contains the asset details and the ideal permissible limits of the asset, be it the car engine, tire pressure, engine oil, etc. This is simple condition-based maintenance. The results of the rules are written to Event Hubs for further processing by various applications.
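The Python sketch below illustrates the second job's rule logic under stated assumptions: each event is looked up in asset metadata by `deviceId` (a stand-in for the Asset DB input), and simple threshold rules fire when a reading falls outside the asset's permissible limits. `AssetSpec`, its field names, and every limit other than the 100 km/h speed example are hypothetical. In Stream Analytics itself, this would be expressed as a query that joins the stream with the reference input, not as Python.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class AssetSpec:
    """Hypothetical permissible limits for one vehicle, as stored in the Asset DB."""
    max_speed_kmh: float
    min_oil_level_pct: float
    min_tire_pressure_psi: float


def evaluate_rules(event: dict, assets: Dict[str, AssetSpec]) -> List[dict]:
    """Join the event with its asset metadata and return one alert per violated limit."""
    spec = assets.get(event["deviceId"])
    if spec is None:
        return []  # no metadata for this device, so no rule can be evaluated
    alerts = []
    if event["speed"] > spec.max_speed_kmh:
        alerts.append({"rule": "overspeed", "value": event["speed"]})
    if event["engineOilLevel"] < spec.min_oil_level_pct:
        alerts.append({"rule": "low_engine_oil", "value": event["engineOilLevel"]})
    if event["tirePressure"] < spec.min_tire_pressure_psi:
        alerts.append({"rule": "low_tire_pressure", "value": event["tirePressure"]})
    # Tag each alert with the device so downstream consumers can route it
    return [dict(alert, deviceId=event["deviceId"]) for alert in alerts]


if __name__ == "__main__":
    assets = {"car-001": AssetSpec(100.0, 20.0, 30.0)}
    reading = {"deviceId": "car-001", "speed": 112.5,
               "engineOilLevel": 18.0, "tirePressure": 31.0}
    for alert in evaluate_rules(reading, assets):
        print(alert["rule"])  # overspeed, then low_engine_oil
```

Keeping the limits in per-asset metadata rather than hard-coding them in the rules is what lets one set of rules serve vehicles with different specifications.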

We create a custom event handler that acts as an Event Hubs consumer: it picks up the data from Event Hubs and uses the Notification Hubs APIs to push high-priority events to mobile devices. The handler also sends updates to web dashboards and sends a message back to the car through IoT Hub, addressed to that device's queue by its device ID. The connected car device then displays the notification on its dashboard.
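A minimal sketch of the event handler's routing logic, assuming each alert read from Event Hubs carries a `deviceId` and a hypothetical `priority` field. The three sender callables (`push_to_mobile`, `update_dashboard`, `send_to_device`) are hypothetical placeholders for a Notification Hubs push, a web dashboard update, and an IoT Hub cloud-to-device message; consuming from Event Hubs is not shown, and sending every alert to the dashboard and the car is an assumption.

```python
from typing import Callable, Dict, Iterable

Sender = Callable[[str, dict], None]  # (device_id, alert) -> None


def handle_alerts(alerts: Iterable[dict], push_to_mobile: Sender,
                  update_dashboard: Sender, send_to_device: Sender) -> Dict[str, int]:
    """Route each alert read from Event Hubs and return per-channel counts."""
    counts = {"mobile": 0, "dashboard": 0, "device": 0}
    for alert in alerts:
        device_id = alert["deviceId"]
        # Only high-priority events are pushed to mobile phones
        if alert.get("priority") == "high":
            push_to_mobile(device_id, alert)
            counts["mobile"] += 1
        # Every alert updates the web dashboard and is sent back to the car
        update_dashboard(device_id, alert)
        counts["dashboard"] += 1
        send_to_device(device_id, alert)
        counts["device"] += 1
    return counts
```

Passing the senders in as callables keeps the routing rules testable with simple fakes and leaves the choice of SDK to the real deployment.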

Offline Process

Now, let’s discuss the offline process. The offline process is mainly used for batch processing, analyzing large volumes of data, correlating data from multiple sources, and complex data flows. The other scenario is developing machine learning models from these data sets, training and testing them iteratively until they can predict or classify with reasonable accuracy.

Building Machine Learning Models

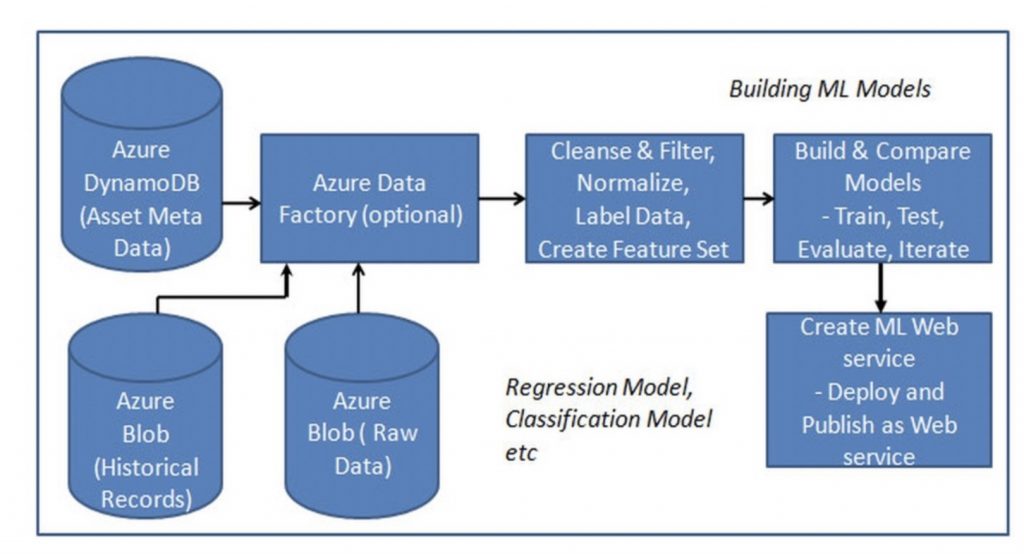

Building machine learning models is an iterative process that involves several tasks, as depicted in the diagram below.

For the connected car solution, we build two machine learning models: one for predictive maintenance and the other for driver behavior analysis.

The following list shows the high-level steps to build a machine learning model using Azure ML. These steps are generic and apply to building any machine learning model.

- Select the data sources that you need for building the model. In the connected car scenario, our data sources are Azure Blob (raw vehicle data), the Asset DB (asset metadata), and a second Azure Blob that contains historical records for vehicle maintenance and driver classification. In the absence of historical records, it is still possible to build predictive models with unsupervised learning techniques and then correlate the outputs manually to derive insights. This process tends to be very complex, and most tools don’t support this methodology; they expect you to provide labeled data (inputs and outputs). In the future, data generated by connected products will be a key asset, and various data providers are likely to offer such historical records (like trends) for analysis.

- Azure Data Factory is an optional data service in the design, used to transfer and process the raw data and to create data-processing pipelines that make the data consumable. Data Factory is particularly useful if you need to integrate with multiple systems and perform data processing to arrive at the desired output.

- The third step is preparing the data to be used by the model. This involves cleaning and filtering the data, normalizing it, creating labeled inputs for classification and, most importantly, creating relevant feature sets based on the use case requirements. Selecting a feature set and building the model is a complex exercise that requires a thorough understanding of, and expertise in, machine learning; it is outside the scope of this article. Data preparation is the most crucial and time-consuming step in building the model. As part of this step, you also create training and test sets: you train the model using the training set and test it iteratively using the test set. Azure ML provides visual composition tools to help prepare the data. Azure ML is available over the web, so you can execute the entire end-to-end process without installing any additional software.

- Once the data is prepared, you build the model in Azure ML by selecting the type of model (regression, classification, etc.) and an associated algorithm, and training it with the data from the previous step. For instance, for a regression model you could use neural network regression, decision forest regression, etc. You can evaluate the candidate models to see which one performs best on your data set. As mentioned, this is an iterative step. For the connected car solution, we perform predictive maintenance using regression algorithms and behavior analysis using multi-class classification. The regression model outputs a confidence score that indicates whether the equipment requires maintenance. For behavior analysis, the model classifies driving behavior into classes such as aggressive, neutral, etc.

- The next step is to publish the machine learning model as a web service so that applications can consume it through an API call.
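Azure ML performs these preparation and evaluation steps through its visual tools. The plain-Python sketch below only illustrates what a few of them do conceptually: min-max normalization of feature columns, a seeded train/test split, and root-mean-square error (RMSE) as one possible metric for comparing regression candidates. The 80/20 split, the seed, and the choice of RMSE are assumptions, not part of the solution described here.

```python
import math
import random
from typing import List, Sequence, Tuple

Row = Tuple[List[float], float]  # (feature vector, label)
Bounds = List[Tuple[float, float]]  # (min, max) per feature column


def fit_min_max(features: Sequence[List[float]]) -> Bounds:
    """Learn per-column (min, max) bounds, typically from the training set only."""
    return [(min(col), max(col)) for col in zip(*features)]


def apply_min_max(features: Sequence[List[float]], bounds: Bounds) -> List[List[float]]:
    """Scale each column with previously fitted bounds; constant columns map to 0.0."""
    return [
        [(v - lo) / (hi - lo) if hi > lo else 0.0 for v, (lo, hi) in zip(row, bounds)]
        for row in features
    ]


def train_test_split(data: Sequence[Row], test_fraction: float = 0.2,
                     seed: int = 42) -> Tuple[List[Row], List[Row]]:
    """Shuffle a copy of the rows and hold out test_fraction of them for testing."""
    shuffled = list(data)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


def rmse(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Root-mean-square error, one way to compare regression models."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))
```

Fitting the normalization bounds on the training set and reusing them for the test set avoids leaking information from the test data into training.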

As mentioned earlier, the real challenge is building the machine learning model and training it to predict a reasonable outcome, which requires significant effort and repeated training over a period of time. Azure Stream Analytics lets you combine data from multiple streams, so you can combine real-time and historical data to arrive at an outcome. For instance, you can combine streams to detect anomalies in real time using machine learning models.
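As one simple illustration of combining real-time and historical data, the Python sketch below scores each live reading against a historical baseline for the same device using a z-score. This is a deliberately simple statistical stand-in, not a trained machine learning model and not how Stream Analytics implements anomaly detection; the per-device baseline and the threshold of 3 standard deviations are assumptions.

```python
import statistics
from typing import Dict, List, Optional, Tuple

Baseline = Tuple[float, float]  # (mean, sample standard deviation)


def build_baselines(history: Dict[str, List[float]]) -> Dict[str, Baseline]:
    """Compute (mean, stdev) per device from historical readings (needs >= 2 values)."""
    return {
        device: (statistics.mean(values), statistics.stdev(values))
        for device, values in history.items()
        if len(values) >= 2
    }


def is_anomaly(device_id: str, value: float, baselines: Dict[str, Baseline],
               threshold: float = 3.0) -> Optional[bool]:
    """Return True if the live value is more than `threshold` stdevs from the mean.

    Returns None when no baseline exists for the device.
    """
    baseline = baselines.get(device_id)
    if baseline is None:
        return None
    mean, stdev = baseline
    if stdev == 0:
        return value != mean  # any deviation from a constant history is unusual
    return abs(value - mean) / stdev > threshold
```

The historical side (`build_baselines`) would be computed offline, while `is_anomaly` is cheap enough to run on every incoming event.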

Currently, there are no pre-built, industry-specific machine learning models available, so an offline process is required to build the model iteratively. In the future, we envision machine learning models being available as services for each industry, such as predictive maintenance for vehicles or for specific machinery types. All you would then have to do is provide the data to these models for prediction. We discussed this concept in the first article, where we talked about Solution Templates.

Integrating Machine Learning Models with Real-time Flow

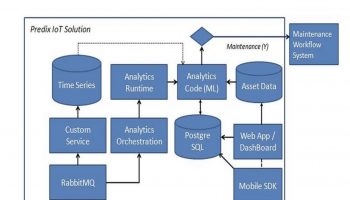

Once our machine learning model is ready, the last step is to integrate it with the runtime flow, as shown below.

We add one more event handler to the existing flow; it calls our predictive model's Azure ML web service through its API. Based on the response, if maintenance is required, the event handler sends a request to an external maintenance workflow system to initiate a work order for repair. The integration of driver behavior analysis is much the same; in this case, the output goes to mobile and web dashboards instead of a maintenance request.
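A minimal sketch of this second handler, under stated assumptions: the published model is reached with an HTTP POST carrying a JSON body and an API key in a bearer `Authorization` header, and it returns a JSON object with a `score` between 0 and 1. The endpoint URL, the request and response shapes, the 0.7 threshold, and the `create_work_order` callback are all hypothetical; check the request/response format of your own web service. The decision logic is kept separate from the network call so it can be exercised without a live endpoint.

```python
import json
import urllib.request
from typing import Callable

# Hypothetical endpoint and key for the published predictive maintenance service
SCORING_URL = "https://example.invalid/score"
API_KEY = "<your-api-key>"
MAINTENANCE_THRESHOLD = 0.7  # assumed cut-off for "maintenance required"


def score_via_http(features: dict) -> float:
    """POST the features as JSON and read an assumed 'score' field from the response."""
    request = urllib.request.Request(
        SCORING_URL,
        data=json.dumps(features).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {API_KEY}"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return float(json.loads(response.read().decode("utf-8"))["score"])


def handle_event(event: dict, score: Callable[[dict], float],
                 create_work_order: Callable[[str, float], None]) -> bool:
    """Score one event and open a repair work order if the score crosses the threshold."""
    maintenance_score = score(event)
    if maintenance_score >= MAINTENANCE_THRESHOLD:
        create_work_order(event["deviceId"], maintenance_score)
        return True
    return False
```

In production, `handle_event(event, score_via_http, submit_order)` would be called for each event, where `submit_order` is whatever client your maintenance workflow system provides; the driver behavior handler would follow the same shape with a different callback.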

This completes the Azure IoT implementation. Next, we look at building IoT applications using the IBM Cloud stack.