This article is part of IoT Architecture Series – https://navveenbalani.dev/index.php/articles/internet-of-things-architecture-components-and-stack-view/

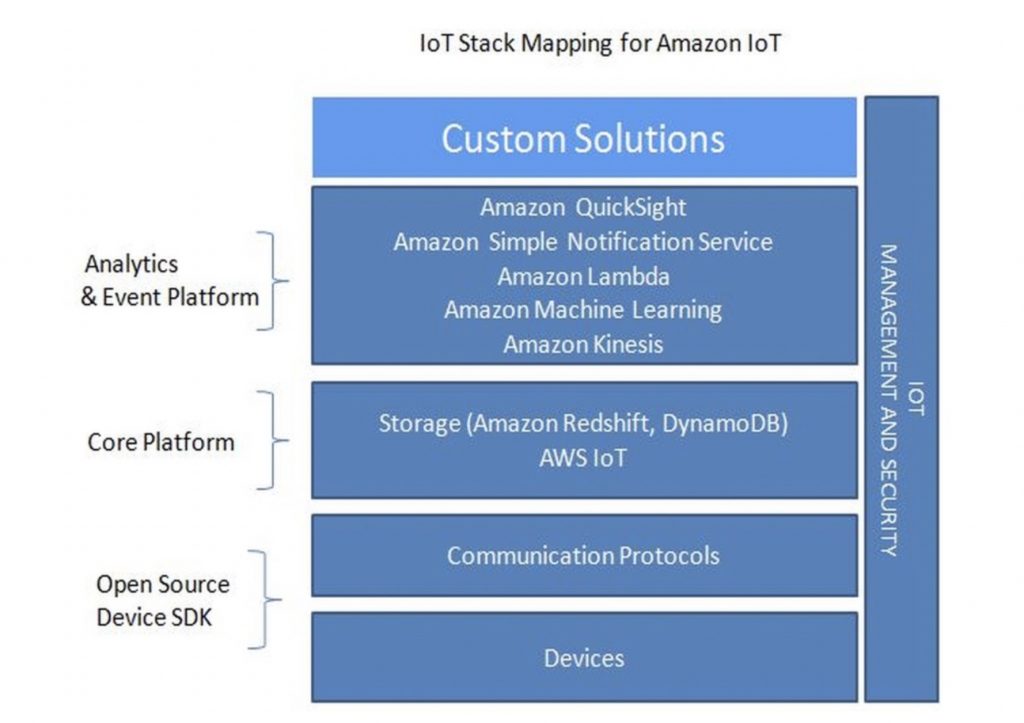

In this section, we will look at how to realize the IoT use case using Amazon Web Services (AWS). Similar to Microsoft Azure and IBM Bluemix, AWS offers a host of services for building IoT applications.

Amazon announced the AWS IoT service on 8th October 2015 at its AWS re:Invent event. AWS IoT makes it easier to build IoT applications: the platform allows you to collect, store, analyze and act on large volumes of data streaming from your connected devices. This was an important update from Amazon because, prior to this announcement, there was no direct IoT offering from Amazon comparable to Microsoft's IoT Hub or the IBM Watson IoT Platform. However, this did not stop anyone from building an IoT application on AWS, as you could develop a custom application or host open source components (like Mosquitto for MQTT) that provide similar functionality on AWS. With the acquisition of 2lemetry (and its ThingFabric IoT platform) in March 2015, we feel Amazon had closed this gap earlier, but there was no official announcement on how ThingFabric capabilities would be used in the context of AWS. We assume they leveraged the ThingFabric platform (MQTT and HTTP support) and the ThingFabric rules integration, which allows connecting to various AWS services. Please note this is purely our viewpoint; there is no official confirmation of how the ThingFabric platform is leveraged (if at all) internally in the AWS IoT offering.

Let’s analyze all the components –

AWS IoT Device SDK

The AWS IoT Device SDK simplifies the process of connecting devices securely to the AWS IoT platform over the MQTT protocol. The Device SDK currently supports C for Linux, libraries for the Arduino platform, and Node.js libraries for embedded platforms like BeagleBone and Raspberry Pi.

All bi-directional communication between devices and the AWS IoT platform is encrypted using the Transport Layer Security (TLS) protocol. We will discuss security in a later section. The Device SDK also offers the option of using third-party cryptographic libraries with X.509 certificates over TLS 1.2 to connect devices securely to the AWS IoT platform.

Tip – The AWS IoT Starter Kits provide everything you need in a box to get started with the AWS IoT platform. Plug in the device and it connects to the AWS IoT platform. You can purchase the kits from Amazon.com.

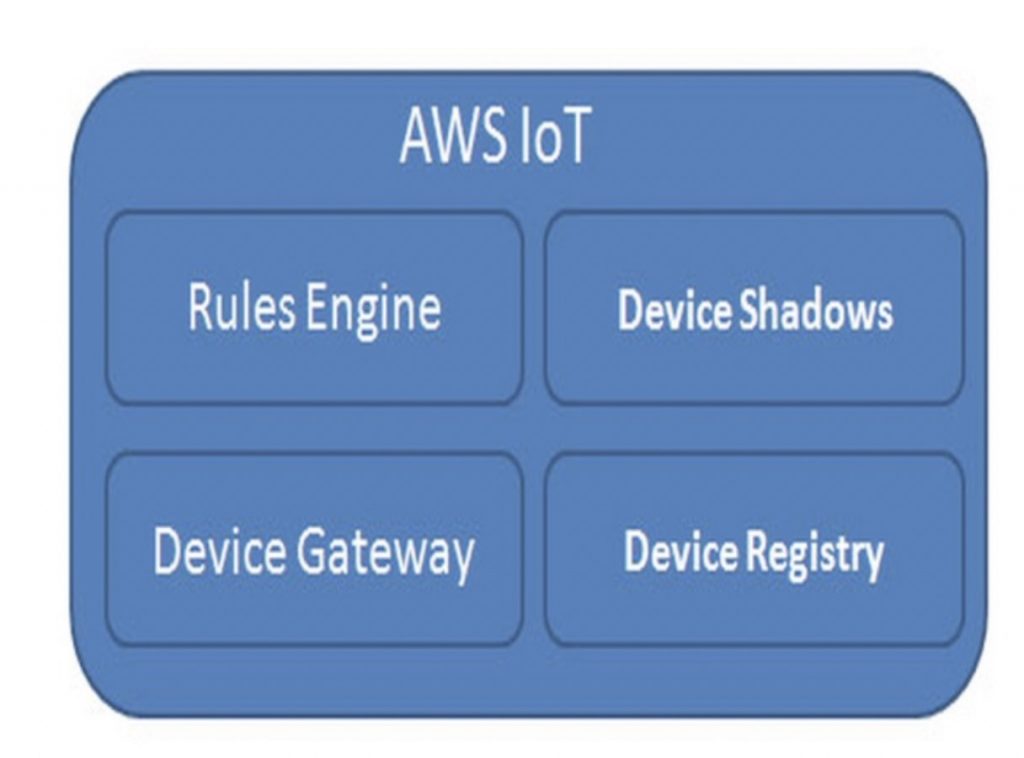

AWS IoT

AWS IoT is a highly scalable, managed cloud platform that allows devices to securely connect and interact with AWS services and other devices over the standardized MQTT and HTTP protocols. AWS IoT also allows applications to interact with devices even when they are offline: it stores each device's latest state and syncs that state back to the device when it reconnects.
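To make the offline-sync idea concrete, the following minimal Python sketch computes what a reconnecting device still has to change. It borrows the `desired`/`reported`/`delta` vocabulary of AWS IoT Device Shadow documents, but `compute_delta` and `apply_delta` are hypothetical helpers written for illustration, not the service's implementation or API.

```python
from typing import Any, Dict


def compute_delta(desired: Dict[str, Any], reported: Dict[str, Any]) -> Dict[str, Any]:
    """Return the desired entries that differ from what the device last reported.

    Nested dictionaries are compared recursively. Keys present only in
    `reported` are ignored, since nobody has asked the device to change them.
    """
    delta: Dict[str, Any] = {}
    for key, want in desired.items():
        have = reported.get(key)
        if isinstance(want, dict) and isinstance(have, dict):
            nested = compute_delta(want, have)
            if nested:
                delta[key] = nested
        elif want != have:
            delta[key] = want
    return delta


def apply_delta(reported: Dict[str, Any], delta: Dict[str, Any]) -> Dict[str, Any]:
    """Return a new reported state with the delta merged in (the input is not modified)."""
    merged = dict(reported)
    for key, value in delta.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = apply_delta(merged[key], value)
        else:
            merged[key] = value
    return merged


if __name__ == "__main__":
    # While the car is offline, an application sets a new desired state on its shadow.
    desired = {"ac": "on", "temperature": 21, "lights": {"head": "off"}}
    reported = {"ac": "off", "temperature": 21, "lights": {"head": "on"}, "speed": 0}
    delta = compute_delta(desired, reported)
    print(delta)  # {'ac': 'on', 'lights': {'head': 'off'}}
    # On reconnect, the device applies the delta and reports its new state.
    print(apply_delta(reported, delta))
```

Keys that exist only in the reported state (like `speed`) stay out of the delta, because the application has expressed no wish for them.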

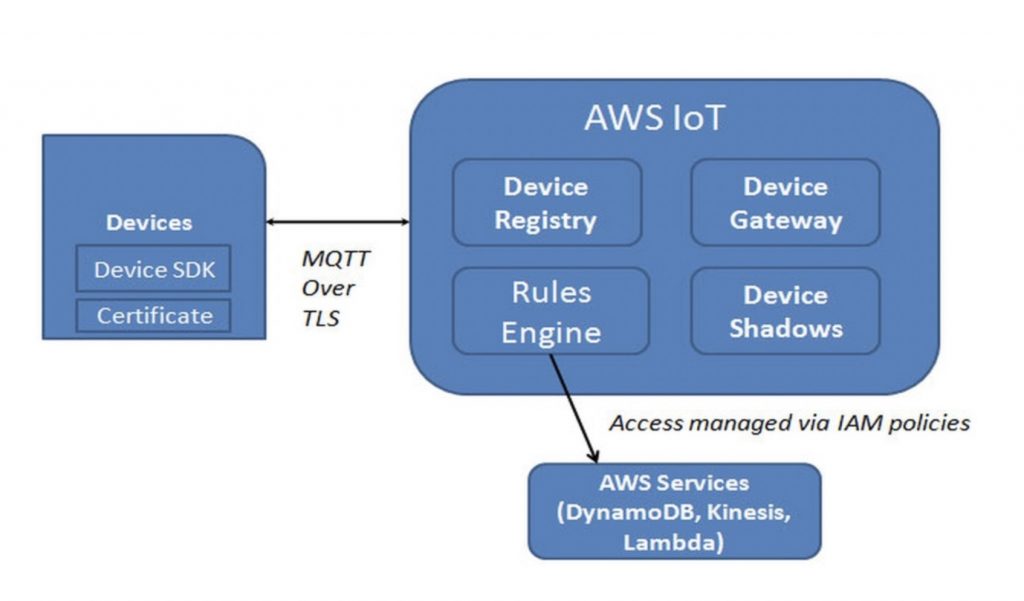

The AWS IoT platform consists of a core set of capabilities, as shown in the figure: a device gateway, a device registry, a rules engine and device shadows.

Let’s understand each of the components –

- Device Gateway: The AWS IoT Device Gateway is a highly scalable managed service that enables devices to communicate securely with AWS IoT. The Device Gateway supports the publish-subscribe model and provides support for the MQTT, WebSocket and HTTP 1.1 protocols. We discussed the publish-subscribe pattern in Chapter 1. Its main advantage is that it decouples senders from consumers, allowing new devices to connect and start receiving messages simply by subscribing to the relevant topics.

- Device Registry: The Device Registry stores metadata about devices and acts as their identity store. Each device is assigned a unique identity by the Device Registry during registration. We described the typical features of a device registry in Chapter 1.

- Device Shadows: Device Shadows is a unique capability of the AWS IoT platform that maintains a virtual device, or shadow, containing the latest state of each device. Applications can communicate with the shadow through APIs even when the physical device is offline. When the device reconnects, AWS IoT automatically synchronizes the state and pushes to the device any changes that were made on the shadow.

- Rules Engine: The Rules Engine is a highly scalable engine that transforms and routes incoming device messages to AWS services based on business rules you author. Rules are written in an SQL-like syntax, and each query runs against an MQTT topic to extract the required data from incoming messages, which must be in JSON format. Here is a sample query:

SELECT * FROM 'iot/ccar-ad/#' WHERE speed > 120

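In the above query, 'iot/ccar-ad/#' is the topic filter for the topics to which devices publish messages such as the following JSON; '#' is the MQTT multi-level wildcard, so the rule covers every topic under 'iot/ccar-ad/'. For this message the rule evaluates to true, as the speed is 140 km/h.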

{
  "deviceid": "audi22",
  "speed": 140,
  "gps": {
    "latitude": ...,
    "longitude": ...
  },
  "tyre1": 30,
  ...
}
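The sample rule combines two checks: the message's topic must match the topic filter in the FROM clause, and the JSON payload must satisfy the WHERE clause. The Python sketch below reproduces both checks for this one rule, assuming standard MQTT wildcard semantics (`+` matches exactly one level, `#` matches the remaining levels, including none). It illustrates the logic only and is not how the AWS IoT rules engine is implemented; the topic `iot/ccar-ad/audi22` is a hypothetical example.

```python
import json
from typing import Any, Dict


def topic_matches(topic_filter: str, topic: str) -> bool:
    """Match an MQTT topic against a filter that may use '+' and '#' wildcards."""
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(filter_levels):
        if level == "#":
            # Multi-level wildcard: matches the parent level and everything below it.
            return True
        if i >= len(topic_levels):
            return False
        if level != "+" and level != topic_levels[i]:
            return False
    # Every filter level matched; the topic must not have extra levels.
    return len(filter_levels) == len(topic_levels)


def speed_rule(topic: str, payload: str) -> bool:
    """Evaluate: SELECT * FROM 'iot/ccar-ad/#' WHERE speed > 120."""
    if not topic_matches("iot/ccar-ad/#", topic):
        return False
    message: Dict[str, Any] = json.loads(payload)
    speed = message.get("speed")
    # A missing or non-numeric speed does not satisfy the condition.
    return isinstance(speed, (int, float)) and speed > 120


if __name__ == "__main__":
    msg = json.dumps({"deviceid": "audi22", "speed": 140, "tyre1": 30})
    print(speed_rule("iot/ccar-ad/audi22", msg))  # True
    print(speed_rule("iot/other/audi22", msg))    # False
```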

AWS IoT also provides various options for secure communication between devices and the AWS IoT platform. Understanding the end-to-end security strategy for your IoT application is a key step, as it spans the devices, the AWS IoT platform, and the other AWS services used by the application. Let's discuss it in detail in the next section.

Device Security, Authorization, and Authentication

Let's look at how devices securely connect to the AWS IoT platform and how they are authorized. The AWS IoT platform provides secure, bi-directional communication between devices and the AWS cloud. Devices connect using one of three identity options: digital certificates (X.509 certificates), AWS authentication through IAM users and their credentials, or their own identity provider or third-party providers like Google and Facebook through Amazon Cognito. Your identity choice determines which application protocol is used for communication and how identities are managed. For instance, you would typically choose X.509 certificates with the MQTT protocol, which keeps the bi-directional communication between devices and the AWS IoT platform encrypted. Amazon Cognito fits when you have already invested in third-party identity management and want to reuse it for IoT applications, while HTTP suits managing identities with the AWS Identity and Access Management (IAM) service. We do not recommend using HTTP for devices unless no MQTT library exists for the device.

Note – TLS requires digital certificates for authentication. AWS supports X.509 certificates, which allow asymmetric keys to be used with devices. The AWS IoT commands in the AWS command line interface (CLI) make it easier to create, manage and deploy X.509 digital certificates.

Once a device is authenticated, authorization is handled through policies. Policies define which device operations (connect, publish, subscribe, receive) are permitted. You create policies according to your identity choice: for X.509 certificates and Amazon Cognito identities, you create AWS IoT policies and attach them to the certificate or identity, while for IAM users you create IAM policies through the IAM console. Once authorized, the device can perform the permitted operations. This completes the device's communication with the AWS IoT platform.

Next, your IoT application will likely need to invoke other AWS services, for example to persist device data from a topic to DynamoDB or to process large volumes of data streams in real time through an Amazon Kinesis stream. This is handled through rules, as discussed earlier. To access a particular Amazon Kinesis stream, you need an IAM role with a policy granting that access; the rule uses this role to let AWS IoT access the stream securely. This ensures end-to-end secure connectivity between devices and the AWS IoT platform, and from the AWS IoT platform to the rest of the AWS services.
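The device authorization described above follows the usual AWS policy logic: a request is denied by default, allowed if a matching statement allows it, and denied whenever a matching statement explicitly denies it. The Python sketch below models only that logic. The `Statement` class and the simplified `topic/...` resource strings are hypothetical stand-ins; real AWS IoT policies are JSON documents with full ARNs, conditions and policy variables that this sketch ignores.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase
from typing import List


@dataclass
class Statement:
    effect: str           # "Allow" or "Deny"
    actions: List[str]    # e.g. ["iot:Subscribe", "iot:Receive"]
    resources: List[str]  # patterns; "*" matches any run of characters


def _matches(patterns: List[str], value: str) -> bool:
    return any(fnmatchcase(value, pattern) for pattern in patterns)


def is_authorized(statements: List[Statement], action: str, resource: str) -> bool:
    """Default deny; a matching Allow grants access unless a matching Deny exists."""
    allowed = False
    for statement in statements:
        if _matches(statement.actions, action) and _matches(statement.resources, resource):
            if statement.effect == "Deny":
                return False  # an explicit deny always wins
            if statement.effect == "Allow":
                allowed = True
    return allowed


if __name__ == "__main__":
    # Hypothetical read-only device: may receive car messages but not publish them.
    policy = [
        Statement("Allow", ["iot:Connect"], ["*"]),
        Statement("Allow", ["iot:Subscribe", "iot:Receive"], ["topic/ccar/*"]),
        Statement("Deny", ["iot:Publish"], ["topic/ccar/*"]),
    ]
    print(is_authorized(policy, "iot:Receive", "topic/ccar/audi22"))  # True
    print(is_authorized(policy, "iot:Publish", "topic/ccar/audi22"))  # False
```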

The following diagram shows the execution flow for secure MQTT connectivity between devices and the AWS IoT platform.

To summarize the execution flow for secure MQTT connectivity: the onus is on you to assign a unique identity to each device through the Device Registry, create and manage the certificates on the devices, and connect to AWS IoT over TLS using these certificates. You can use the AWS IoT Device SDK, which provides libraries for TLS-based authentication. Once a device establishes a connection, the AWS IoT platform is responsible for authenticating it based on its client X.509 certificate, validating the certificate using public-key cryptography. Once the device is authenticated, each operation is authorized against the policies attached to the certificate, and only permitted operations are executed. For instance, a device may be allowed to read messages from a topic but not to publish on it. As discussed earlier, to interact with other AWS services, you author rules that define how messages are routed. For instance, you can route messages to Amazon Kinesis to process large volumes of data streams in real time. To access a particular Amazon Kinesis stream, you need a policy (a role with permissions) defined in IAM, which the rule uses to let AWS IoT access the stream securely.
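On the device side, the mutual TLS setup in this flow can be prepared with Python's standard `ssl` module. The sketch below only builds the TLS context (TLS 1.2 or later, server certificate and hostname verification, and the device's X.509 certificate and private key); handing it to an MQTT client library or the AWS IoT Device SDK to open the connection is not shown. The function name is hypothetical, and 8883 is the standard port for MQTT over TLS.

```python
import ssl
from typing import Optional

MQTT_TLS_PORT = 8883  # standard port for MQTT over TLS


def build_tls_context(ca_file: Optional[str] = None,
                      cert_file: Optional[str] = None,
                      key_file: Optional[str] = None) -> ssl.SSLContext:
    """Create a client-side TLS 1.2+ context for certificate-based (mutual) authentication."""
    # Verifies the server certificate and hostname; trusts ca_file if given, else system CAs.
    context = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca_file)
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    if cert_file is not None:
        # The device presents its X.509 certificate; the matching private key is read
        # from a local file and used to prove possession during the handshake.
        context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return context
```

A device would call something like `build_tls_context("root-ca.pem", "device-cert.pem", "device-private.key")`, where the three file names are hypothetical placeholders for the CA certificate, the device certificate and its private key.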

Storage

AWS provides various storage options: Amazon DynamoDB (a NoSQL database), Amazon Redshift and Amazon Relational Database Service (RDS). For IoT applications, a natural initial choice is Amazon DynamoDB, a highly scalable, high-performance NoSQL database with single-digit millisecond latency at any scale. It is a fully managed database service and provides first-class support for triggering events on table data modifications via AWS Lambda. We will discuss AWS Lambda in a later section.

Amazon Redshift is a fast, fully managed, petabyte-scale data warehouse that delivers fast query performance by parallelizing queries across nodes, which makes it suited to analyzing massive volumes of data. Depending on the volume of data you need to analyze over time and the complexity of your analytics queries, you can move to Amazon Redshift or import the data from DynamoDB into Redshift. You need the right schema design with Amazon Redshift, as it affects querying, indexing and the performance of your queries. For instance, if you need to compute the average power consumption of a connected city for each location at runtime, you are likely dealing with millions of records; in such a scenario, Amazon Redshift may be an ideal choice. Also, not all device data needs to be stored in Redshift: only the key actionable data sets streaming from millions of connected devices, on which you run complex analytics queries to derive actionable insights.
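The per-location average mentioned above is a plain SQL aggregation. The sketch below runs it against Python's built-in SQLite purely as a local stand-in for Amazon Redshift; the table name `power_readings`, its columns and the sample rows are hypothetical. On Redshift, the same kind of `GROUP BY` query would be parallelized across nodes over far more rows.

```python
import sqlite3
from typing import List, Tuple


def average_power_by_location(rows: List[Tuple[str, str, float]]) -> List[Tuple[str, float]]:
    """Load (device_id, location, power_kw) rows and return the average power per location."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute(
            "CREATE TABLE power_readings (device_id TEXT, location TEXT, power_kw REAL)"
        )
        conn.executemany("INSERT INTO power_readings VALUES (?, ?, ?)", rows)
        # The analytics query itself; ORDER BY makes the output deterministic.
        query = (
            "SELECT location, AVG(power_kw) AS avg_power_kw "
            "FROM power_readings GROUP BY location ORDER BY location"
        )
        return [(location, round(avg, 2)) for location, avg in conn.execute(query)]
    finally:
        conn.close()


if __name__ == "__main__":
    sample = [
        ("meter-1", "north", 4.0),
        ("meter-2", "north", 6.0),
        ("meter-3", "south", 3.5),
    ]
    print(average_power_by_location(sample))  # [('north', 5.0), ('south', 3.5)]
```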

From an integration perspective, you can store device data directly in DynamoDB using the AWS IoT rules engine. You author a rule in AWS IoT that triggers on a specified MQTT topic, selects the message, and provides an IAM role that has access to the DynamoDB service and can perform the required operations (like insert) on it. The following example shows a rule that stores all device data from the topic 'topic/ccar' in the DynamoDB table 'ccardb':

{
  "sql": "SELECT * FROM 'topic/ccar'",
  "ruleDisabled": false,
  "actions": [{
    "dynamoDB": {
      "tableName": "ccardb",
      "hashKeyField": "key",
      "hashKeyValue": "${topic()}",
      "rangeKeyField": "timestamp",
      "rangeKeyValue": "${timestamp()}",
      "roleArn": "arn:aws:iam::1xxx/iot-actions-role"
    }
  }]
}

The above assumes you have created a DynamoDB table named ccardb. When creating the table, you specify its primary key as either a hash key alone or a hash key plus a range key. Choosing the right hash and range keys is a key consideration, as it determines how the data is indexed and retrieved. In the above case, we chose the topic name and the timestamp as the hash and range values.

We discussed the time series domain earlier; you can design the DynamoDB schema so that it handles time series operations effectively.
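The effect of the hash/range key choice on time series access can be illustrated with a small in-memory model: items are grouped by hash key (here, the topic) and kept sorted by range key (the timestamp), so a time-window query touches one partition and a contiguous slice of it. This mimics the shape of a DynamoDB key-condition query with an inclusive `BETWEEN` on the range key; it is not the DynamoDB API, and `TimeSeriesTable` with its methods is hypothetical.

```python
import bisect
from collections import defaultdict
from typing import Any, Dict, List


class TimeSeriesTable:
    """Toy model of a table keyed by a hash key (topic) and a range key (timestamp)."""

    def __init__(self) -> None:
        # Per hash key: timestamps kept sorted, with items stored at matching positions.
        self._stamps: Dict[str, List[int]] = defaultdict(list)
        self._items: Dict[str, List[Dict[str, Any]]] = defaultdict(list)

    def put(self, topic: str, timestamp: int, item: Dict[str, Any]) -> None:
        stamps, items = self._stamps[topic], self._items[topic]
        i = bisect.bisect_left(stamps, timestamp)
        if i < len(stamps) and stamps[i] == timestamp:
            items[i] = item  # same hash + range key: the stored item is replaced
        else:
            stamps.insert(i, timestamp)
            items.insert(i, item)

    def query(self, topic: str, start: int, end: int) -> List[Dict[str, Any]]:
        """Return one partition's items with start <= timestamp <= end, oldest first."""
        stamps = self._stamps.get(topic, [])
        items = self._items.get(topic, [])
        lo = bisect.bisect_left(stamps, start)
        hi = bisect.bisect_right(stamps, end)
        return items[lo:hi]


if __name__ == "__main__":
    table = TimeSeriesTable()
    for ts, speed in [(1000, 80), (1060, 95), (1120, 130), (1180, 110)]:
        table.put("topic/ccar", ts, {"speed": speed})
    print(table.query("topic/ccar", 1050, 1130))  # [{'speed': 95}, {'speed': 130}]
```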

To store incoming data in Amazon Redshift, you can use Amazon Kinesis Firehose, which we discuss in the next section. In the rule, you specify the Firehose delivery stream name and an IAM role for access, and the delivery stream is configured to load the data into Amazon Redshift.

The following shows an example:

{
  "sql": "SELECT * FROM 'topic/ccar'",
  "ruleDisabled": false,
  "actions": [{
    "firehose": {
      "roleArn": "arn:aws:iam::2xxx/iot-actions-role",
      "deliveryStreamName": "ccarfst"
    }
  }]
}

Amazon Kinesis

The Amazon Kinesis platform provides real-time data processing, enabling applications to capture continuous streams of data from devices and other sources and analyze them at runtime to drive real-time dashboards or trigger the required actions. The Amazon Kinesis platform consists of three services:

- Amazon Kinesis Firehose: Amazon Kinesis Firehose lets you capture streaming data and load it directly into Amazon S3 and Amazon Redshift, making it available in near real time for reporting and analysis.

- Amazon Kinesis Streams: Amazon Kinesis Streams lets you build custom, highly scalable, real-time streaming applications for your specific requirements, such as real-time optimization of multi-stage process flows. Take the example of a connected airport, where one use case is providing the fastest journey time for passengers through the airport. The process would involve streaming data from multiple connected sources and points of interest, like check-in counters, security gates, current passenger movements, baggage handling and services, immigration, etc., and applying a specialized set of algorithms that correlate the data in real time to predict passenger journey times and then optimize them.

- Amazon Kinesis Analytics: The Amazon Kinesis Analytics service (not yet released at the time of writing this book) will make it easier to extract and analyze data using SQL-like queries and send the query output to specified services (like AWS Lambda) to create alerts and take corrective action in real time. Until Amazon Kinesis Analytics is available, you need to create your own custom AWS Lambda functions to extract the data. The incoming data is usually in JSON format, and your custom functions would use JSON APIs to extract the data, execute custom rules, and take action based on the outcome.
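As a sketch of such a custom function, the handler below processes a batch of Kinesis records in the form a Lambda function attached to a stream receives them: each record's payload arrives base64-encoded under `Records[i].kinesis.data`. It is written in Python (which Lambda also supports) rather than Node.js or Java, the 120 km/h threshold reuses the earlier rule example, and returning the alerts stands in for the real action, such as sending a notification, which is not shown.

```python
import base64
import json
from typing import Any, Dict, List

SPEED_LIMIT_KMH = 120  # threshold reused from the earlier rules engine example


def handler(event: Dict[str, Any], context: Any = None) -> Dict[str, Any]:
    """Decode each Kinesis record, parse its JSON payload and apply a custom rule."""
    records = event.get("Records", [])
    alerts: List[Dict[str, Any]] = []
    for record in records:
        raw = base64.b64decode(record["kinesis"]["data"])
        try:
            message = json.loads(raw)
        except ValueError:
            continue  # skip payloads that are not valid JSON
        if not isinstance(message, dict):
            continue  # the rule expects a JSON object
        speed = message.get("speed")
        if isinstance(speed, (int, float)) and speed > SPEED_LIMIT_KMH:
            alerts.append({"deviceid": message.get("deviceid"), "speed": speed})
    # A real function would act on the alerts here (e.g. send a notification).
    return {"processed": len(records), "alerts": alerts}


if __name__ == "__main__":
    def to_record(msg: Dict[str, Any]) -> Dict[str, Any]:
        return {"kinesis": {"data": base64.b64encode(json.dumps(msg).encode()).decode()}}

    event = {"Records": [to_record({"deviceid": "audi22", "speed": 140}),
                         to_record({"deviceid": "bmw7", "speed": 90})]}
    print(handler(event))  # {'processed': 2, 'alerts': [{'deviceid': 'audi22', 'speed': 140}]}
```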

You can stream data from devices directly into Amazon Kinesis Firehose or Amazon Kinesis Streams using the AWS IoT rules engine.

You need to author rules that trigger on a specified MQTT topic, select the message, and provide an IAM role that has access to the Kinesis stream. The following configuration is required for accessing the Kinesis stream:

"kinesis": {
  "roleArn": "string",        // IAM role
  "streamName": "string",     // Kinesis stream name
  "partitionKey": "string"    // Kinesis stream partition key
},

The following is the configuration for Amazon Kinesis Firehose:

"firehose": {
  "roleArn": "string",              // IAM role
  "deliveryStreamName": "string"    // delivery stream name
}
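The `partitionKey` setting in the Kinesis action decides which shard receives each record: Kinesis computes an MD5 hash of the partition key, treats it as a 128-bit integer, and routes the record to the shard whose hash key range contains that value. The sketch below reproduces that mapping under the simplifying assumption that the stream's shards split the hash key space into equal ranges (a real stream's ranges come from its shard metadata), and it assumes the key is UTF-8 encoded before hashing. Using a device ID as the partition key keeps each device's records together on one shard.

```python
import hashlib

HASH_SPACE = 2 ** 128  # MD5 produces a 128-bit value


def shard_for_key(partition_key: str, shard_count: int) -> int:
    """Return the index of the shard owning this key, assuming equal-sized hash ranges."""
    if shard_count < 1:
        raise ValueError("shard_count must be at least 1")
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    hash_value = int.from_bytes(digest, "big")
    range_size = HASH_SPACE // shard_count
    # min() keeps the few top hash values left over by integer division in the last shard.
    return min(hash_value // range_size, shard_count - 1)


if __name__ == "__main__":
    for device in ["audi22", "bmw7", "audi22"]:
        # The same partition key always maps to the same shard.
        print(device, shard_for_key(device, 4))
```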

You can also use the Amazon Kinesis Client Library (KCL) to build applications (like real-time dashboards) that consume stream data in real time or emit data to other AWS services like AWS Lambda for event processing.

Note – Amazon Kinesis Aggregators is a Java framework that enables the automatic creation of real-time aggregated time series data from Amazon Kinesis streams. The project is available at https://github.com/awslabs/amazon-kinesis-aggregators and provides a good starting point for time series analysis of the incoming data.
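To give a flavor of the aggregation such a framework automates, here is a minimal Python sketch that groups readings into fixed one-minute windows per device and keeps the count, minimum, maximum and average of each window. It is not the Kinesis Aggregators API (that project is a Java framework); the record fields `deviceid`, `timestamp` (epoch seconds) and `speed` are assumptions based on the earlier sample message.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

WINDOW_SECONDS = 60


def aggregate(records: Iterable[dict], window: int = WINDOW_SECONDS) -> Dict[Tuple[str, int], dict]:
    """Aggregate 'speed' per (deviceid, window start) into count/min/max/avg."""
    buckets: Dict[Tuple[str, int], List[float]] = defaultdict(list)
    for record in records:
        # Align each reading to the start of its fixed (tumbling) window.
        window_start = record["timestamp"] - record["timestamp"] % window
        buckets[(record["deviceid"], window_start)].append(record["speed"])
    return {
        key: {"count": len(v), "min": min(v), "max": max(v), "avg": sum(v) / len(v)}
        for key, v in buckets.items()
    }


if __name__ == "__main__":
    readings = [
        {"deviceid": "audi22", "timestamp": 960, "speed": 100},
        {"deviceid": "audi22", "timestamp": 990, "speed": 140},
        {"deviceid": "audi22", "timestamp": 1020, "speed": 90},
    ]
    for key, stats in sorted(aggregate(readings).items()):
        print(key, stats)
    # ('audi22', 960) {'count': 2, 'min': 100, 'max': 140, 'avg': 120.0}
    # ('audi22', 1020) {'count': 1, 'min': 90, 'max': 90, 'avg': 90.0}
```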

Amazon ML

AWS provides the Amazon Machine Learning service, which offers end-to-end support and tooling for creating, training and fine-tuning machine learning models directly from supported data sources (Amazon Redshift, Amazon S3 or Amazon RDS), along with deployment and monitoring. The models can be invoked through APIs as part of your process to obtain predictions for your applications.

Amazon ML provides interactive visual tools to create, evaluate, and deploy machine learning models. It also implements common data transformations, which are extremely helpful for preparing data for evaluation.
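One such transformation is quantile binning, which Amazon ML recipes expose as `quantile_bin`: a numeric variable is split into a given number of bins holding roughly equal numbers of observations, turning a value such as speed into a categorical feature. The sketch below illustrates the technique in plain Python; it is not Amazon ML's implementation, the function names are hypothetical, and its tie handling (a value equal to a cut point goes to the upper bin) is a simplifying assumption.

```python
import bisect
from typing import List, Sequence


def quantile_edges(values: Sequence[float], n_bins: int) -> List[float]:
    """Return n_bins - 1 cut points that split the sorted values into equal-count groups."""
    if n_bins < 1 or not values:
        raise ValueError("need at least one bin and one value")
    ordered = sorted(values)
    return [ordered[(len(ordered) * i) // n_bins] for i in range(1, n_bins)]


def bin_index(value: float, edges: List[float]) -> int:
    """Return the 0-based bin of a value; values equal to a cut point go to the upper bin."""
    return bisect.bisect_right(edges, value)


if __name__ == "__main__":
    speeds = [10, 20, 30, 40, 50, 60, 70, 80]
    edges = quantile_edges(speeds, 4)
    print(edges)  # [30, 50, 70]
    print([bin_index(s, edges) for s in speeds])  # [0, 0, 1, 1, 2, 2, 3, 3]
```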

The Amazon Machine Learning service is based on the same proven, highly scalable ML technology that Amazon uses for critical functions like supply chain management and fraudulent transaction identification. You can see various machine learning algorithms (like classification and recommendation) in action on the Amazon website.

AWS Lambda

AWS Lambda allows you to run custom code in the AWS cloud. You supply the code as a Node.js (or Java) function and create a deployment package with the required dependencies. As mentioned earlier, AWS Lambda functions can be executed asynchronously in response to events from other AWS services like Amazon Kinesis. You can configure this mapping from the AWS Lambda console to associate your Lambda function with an Amazon Kinesis stream. AWS Lambda functions are a great way to invoke external services (like making a REST call to generate a workflow in an SAP system), send alerts and notifications to mobile devices through Amazon Simple Notification Service, or run any custom code that needs to execute when a desired condition is met.

Apart from the above services, AWS provides a number of other services that can be used as part of IoT applications, like Amazon ElastiCache, an in-memory cache for storing asset metadata, or AWS CloudHSM, which provides hardware-appliance-based secure key storage for handling sensitive data in the cloud, for instance data from health care devices subject to regulatory compliance. You can find all services on the products page at https://aws.amazon.com/products/

Next, we will look at how to implement the connected car solution using the AWS IoT platform.