In the digital age, the allure of Generative A.I. for enterprises is undeniable. It promises to transform industries, opening new avenues for innovation and efficiency. Yet with this power comes an equally significant challenge: the risk of hallucinations and the spread of misinformation. This blog post draws parallels with two earlier crises of trust: the 2008 financial crisis, and the counterfeit products and misleading reviews that spread across online shopping platforms. It then explores the strategies businesses can use to harness Generative A.I. responsibly.

Understanding the Landscape

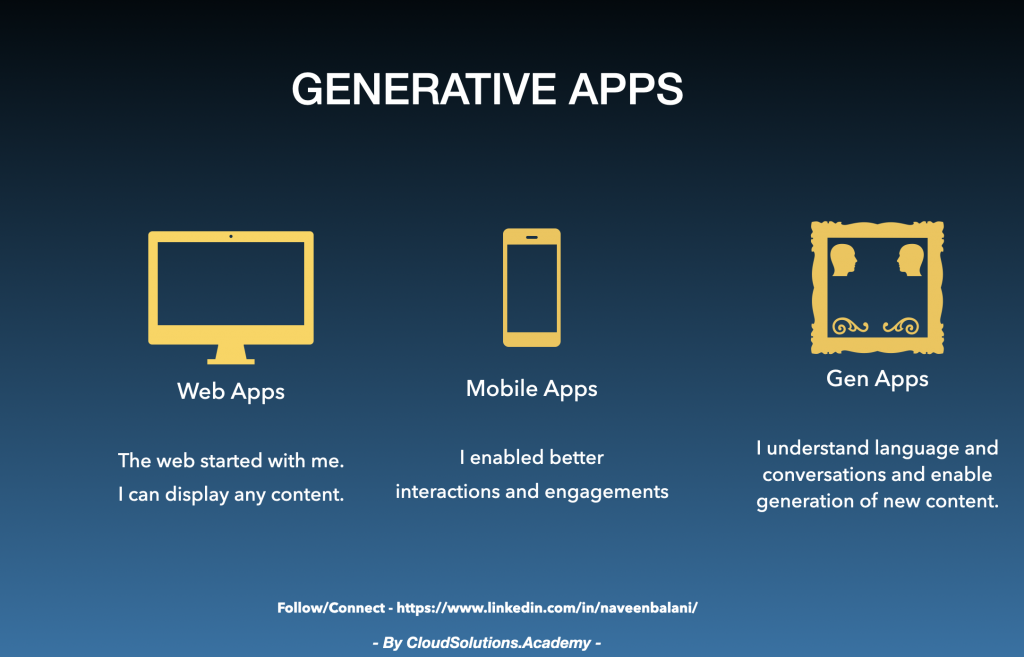

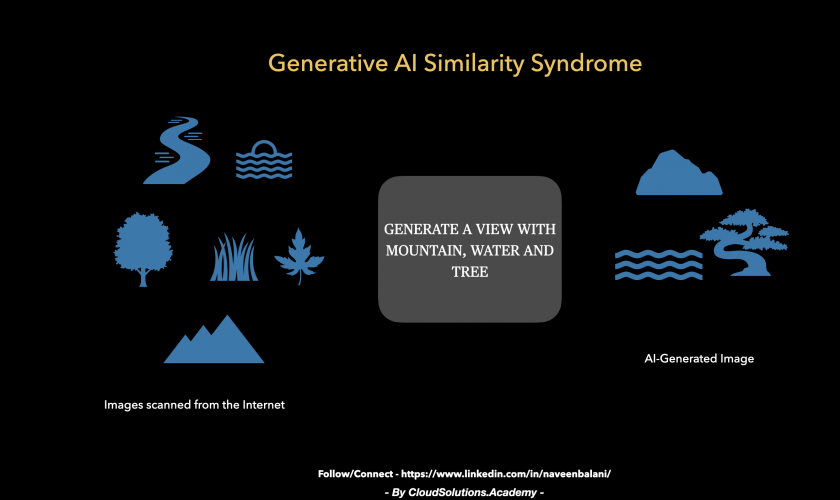

Generative A.I., with its ability to produce vast amounts of content, from text to images and beyond, stands as a testament to the advancements in artificial intelligence. However, this strength can also be its downfall. Hallucinations pose a significant challenge: these are outputs that sound plausible but are incorrect, misleading, or unsupported by any source. When Generative A.I. gets it right, the results can be nothing short of magical. But when it goes awry, its outputs can mislead users or even cause harm.

To understand the potential pitfalls of unchecked Generative A.I., let’s first look at the 2008 financial crisis. Complex and opaque financial instruments, such as mortgage-backed securities, contributed to a global economic meltdown. Investors and the public at large were left in the dark about the true nature and risk of these instruments.

Similarly, as e-commerce platforms grew in popularity, they also became a breeding ground for counterfeit products and fake reviews. Shoppers were often misled by these fraudulent listings, leading to mistrust and skepticism towards even genuine sellers.

Furthermore, the rise of deepfakes has added another layer of complexity to Generative A.I. These hyper-realistic but fabricated videos, audio clips, and images can deceive viewers and enable misinformation, identity theft, and other malicious activities.

Given these challenges, businesses should address these issues as part of a Generative A.I. system’s initial design rather than as an afterthought. Deferring them until the end of development, or until after deployment, risks the kind of trust crisis the financial and e-commerce sectors experienced.

Building Beyond Hallucinations

To harness the potential of Generative A.I. while minimizing the risks, a structured approach is crucial.

Start with Robust Training Data: The foundation of any reliable Generative A.I. system lies in its training data. It’s essential to ensure that the dataset used is both diverse and comprehensive. The quality and breadth of the input data play a pivotal role in determining the quality of the output.

Incorporate Feedback Mechanisms: No system is perfect, and Generative A.I. is no exception. By allowing users to report inaccuracies or misleading information, businesses can continuously refine their models. This not only aids in improving the system but also plays a crucial role in building trust with users.
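
As a rough illustration, a feedback mechanism can start as little more than a log of user reports keyed by output, with outputs that collect enough reports escalated for review. The sketch below is a minimal, in-memory version; the class name, the report reasons, and the escalation threshold are all hypothetical choices for illustration, not a prescribed design.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class FeedbackLog:
    """Hypothetical in-memory store of user reports about A.I. outputs."""

    review_threshold: int = 3  # assumed number of reports before escalation
    reports: dict = field(default_factory=lambda: defaultdict(list))

    def report(self, output_id: str, reason: str) -> None:
        # Record one user report (e.g. "inaccurate", "misleading") for an output.
        self.reports[output_id].append(reason)

    def flagged(self) -> list:
        # Output IDs with enough reports to warrant review, sorted for stable ordering.
        return sorted(
            oid for oid, reasons in self.reports.items()
            if len(reasons) >= self.review_threshold
        )


log = FeedbackLog(review_threshold=2)
log.report("answer-17", "inaccurate")
log.report("answer-17", "misleading")
log.report("answer-42", "inaccurate")
print(log.flagged())  # ['answer-17']
```

In production the reports would live in a database and feed model evaluation or fine-tuning, but the core idea is the same: every report is tied to a specific output so it can be traced and acted on.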

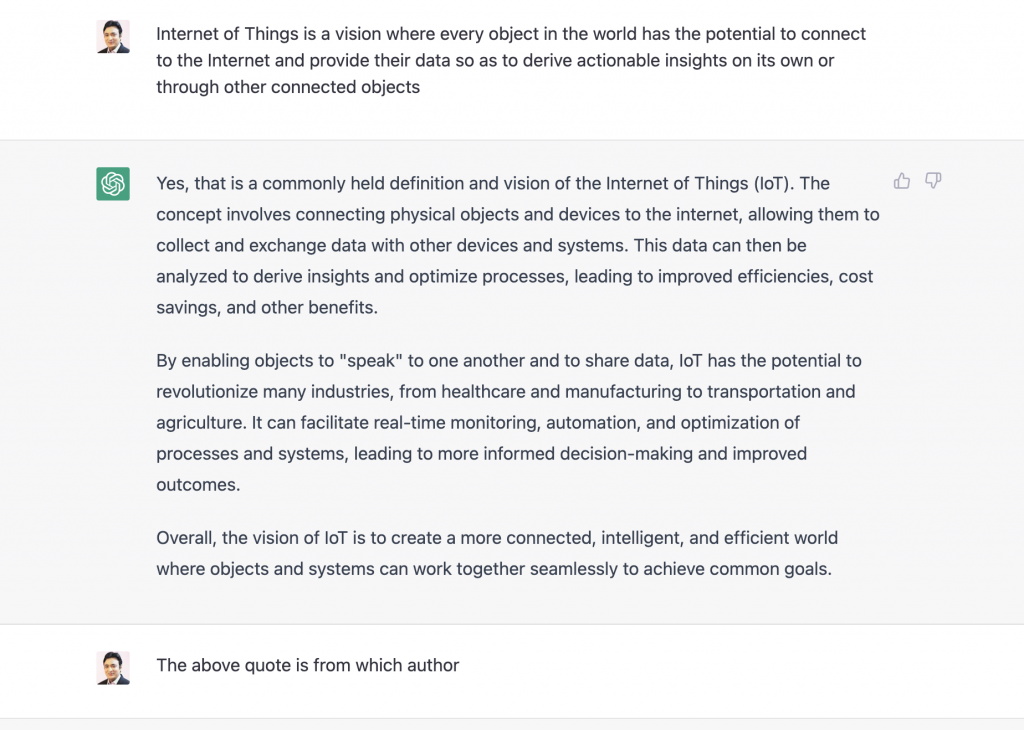

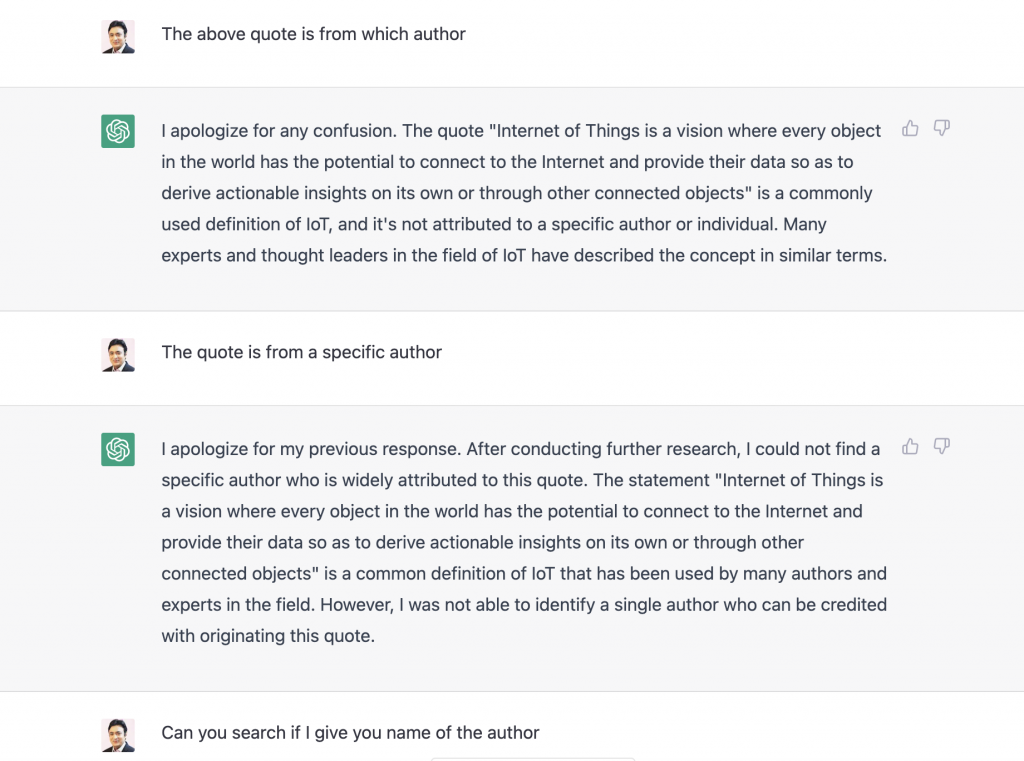

Prompt Engineering for Hallucination Mitigation: Properly designed prompts can guide the A.I. to produce more accurate and relevant outputs. By refining the way we ask questions or provide instructions to the A.I., we can significantly reduce the chances of it producing hallucinated or off-target content.
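
One common prompt-engineering pattern is to supply trusted source passages, tell the model to answer only from them, and give it an explicit way to decline. The sketch below only builds the prompt text; it does not call any particular model API, and the instruction wording and `[source-id]` citation format are assumptions to adapt to your own system.

```python
def build_grounded_prompt(question: str, passages: dict) -> str:
    """Assemble a prompt that restricts the model to the supplied passages.

    `passages` maps a source ID to its text. The instruction wording and the
    bracketed ID format are illustrative assumptions, not a standard.
    """
    context = "\n".join(f"[{sid}] {text}" for sid, text in passages.items())
    return (
        "Answer the question using ONLY the sources below.\n"
        "Cite the source ID in brackets after each claim.\n"
        'If the sources do not contain the answer, reply exactly: "I don\'t know."\n\n'
        f"Sources:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


prompt = build_grounded_prompt(
    "What is the refund window?",
    {"policy-1": "Refunds are accepted within 30 days of purchase."},
)
print(prompt)
```

Giving the model a sanctioned "I don't know" answer matters as much as the grounding itself: without it, the model is implicitly pushed to produce an answer even when the sources are silent.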

Foundational Principles in A.I.: This involves embedding core principles and guidelines into how the A.I. is built and deployed, for example through its training and through system-level guardrails around it. By ensuring that the A.I. operates within predefined ethical and factual boundaries, businesses can further mitigate the risks of misinformation and unethical outputs.

Maintain Transparency: In a world where the lines between human-generated and A.I.-generated content are increasingly blurred, transparency is paramount. Users have a right to know the source of their information. By clearly labeling content generated by A.I., businesses can uphold this right and foster an environment of trust.
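
At its simplest, labeling means every piece of generated content carries provenance metadata and a visible disclosure when it is shown to users. The sketch below assumes a hypothetical `LabeledContent` record and an arbitrary label format; organizations that need interoperable provenance may prefer an industry standard such as C2PA content credentials.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class LabeledContent:
    """Hypothetical record pairing content with its provenance."""

    text: str
    ai_generated: bool
    model_name: str  # hypothetical identifier for the generating model
    created_at: str  # ISO 8601 timestamp in UTC

    def render(self) -> str:
        # Prepend a visible disclosure so readers know the content's source.
        if self.ai_generated:
            return f"[AI-generated by {self.model_name}] {self.text}"
        return self.text


def label_ai_output(text: str, model_name: str) -> LabeledContent:
    # Stamp the output at creation time so the label cannot be forgotten later.
    return LabeledContent(text, True, model_name, datetime.now(timezone.utc).isoformat())


item = label_ai_output("Summary of Q3 results.", "example-model")
print(item.render())  # [AI-generated by example-model] Summary of Q3 results.
```

Attaching the label when the content is created, rather than when it is displayed, keeps the disclosure with the content as it moves between systems.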

Instituting Governance

As with any powerful tool, the ethical and responsible use of Generative A.I. is of utmost importance.

Establish Clear Usage Guidelines: Especially in sensitive areas like news generation or medical advice, it’s crucial to set boundaries. By establishing clear guidelines on the use and scope of Generative A.I., businesses can prevent potential misuse and the spread of misinformation.

Implement Human Oversight: While A.I. has come a long way, the human touch remains irreplaceable. By introducing a system where critical outputs are reviewed by human experts, businesses can ensure the accuracy and relevance of the generated content.
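
A human-in-the-loop policy can be expressed as a simple routing rule: drafts on sensitive topics, or drafts an upstream check scores as low-confidence, go to a reviewer instead of straight to users. In the sketch below, the topic list, the 0.8 threshold, and the `confidence` score are illustrative assumptions; how that score is produced is left open.

```python
from dataclasses import dataclass

# Illustrative policy values; real topics and thresholds are business decisions.
SENSITIVE_TOPICS = {"medical", "legal", "financial", "news"}
MIN_CONFIDENCE = 0.8


@dataclass
class Draft:
    text: str
    topic: str
    confidence: float  # assumed score in [0, 1] from some upstream check


def route(draft: Draft) -> str:
    """Return "human_review" for critical or low-confidence drafts, else "publish"."""
    if draft.topic in SENSITIVE_TOPICS or draft.confidence < MIN_CONFIDENCE:
        return "human_review"
    return "publish"


print(route(Draft("Take two tablets daily.", "medical", 0.99)))  # human_review
print(route(Draft("The store opens at 9 a.m.", "retail", 0.95)))  # publish
```

Routing on topic as well as confidence is deliberate: a model can be confidently wrong, so critical domains get human review regardless of the score.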

Conduct Regular Audits: The world is ever-evolving, and so is the information within it. By periodically assessing the outputs of Generative A.I., businesses can detect potential issues early on and rectify them before they escalate.
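
Regular audits often begin with reviewing a random sample of logged outputs on a fixed schedule. The sketch below draws such a sample; the sampling rate and the output-ID format are assumptions, and the seed is only there so a given audit batch can be reproduced.

```python
import random
from typing import List, Optional


def sample_for_audit(output_ids: List[str], rate: float, seed: Optional[int] = None) -> List[str]:
    """Draw a random sample of logged outputs for periodic human audit.

    `rate` is the assumed fraction to review (e.g. 0.05 for 5%); at least one
    item is drawn whenever the log is non-empty.
    """
    if not 0 < rate <= 1:
        raise ValueError("rate must be in (0, 1]")
    if not output_ids:
        return []
    k = max(1, round(len(output_ids) * rate))
    rng = random.Random(seed)  # seeded so an audit batch can be reproduced
    return rng.sample(output_ids, k)  # sampling without replacement


logged = [f"out-{i}" for i in range(100)]
batch = sample_for_audit(logged, rate=0.05, seed=1)
print(len(batch))  # 5
```

Uniform sampling catches problems nobody has reported yet, which complements the user-feedback log: reports surface known issues, while audits surface unknown ones.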

Prioritize Ethical Considerations: Beyond the technical aspects, it’s essential to reflect on the moral implications of generative content. It’s not just about what A.I. can generate, but what it should generate. By keeping ethical considerations at the forefront, businesses can ensure that their use of Generative A.I. aligns with societal values and norms.

Emphasizing Design

The design of Generative A.I. applications plays a pivotal role in ensuring they are both user-friendly and trustworthy.

Adopt a User-Centric Design: At the heart of any application should be its users. By designing Generative A.I. systems with the end-user in mind, businesses can ensure a seamless and intuitive experience. This includes easy-to-use feedback systems and clear labeling of A.I.-generated content.

Privacy and Security Design: As Generative A.I. systems often deal with vast amounts of data, ensuring the privacy and security of this data is paramount. Implementing robust encryption methods, secure data storage solutions, and strict access controls can help protect user data and maintain trust.

Acknowledge System Limitations: Every system, no matter how advanced, has its limitations. By clearly communicating these to users, businesses can help them understand the context of generated content and where it may fall short.

Iterative Design for Continuous Improvement: Generative A.I. systems should be designed to evolve. By adopting an iterative design approach, businesses can continuously refine and improve their systems based on user feedback and changing requirements.

In conclusion, the promise of Generative A.I. for enterprises is vast and exciting. However, it’s imperative to navigate its challenges with foresight and responsibility. By understanding the broader landscape, building with precision, instituting robust governance, and emphasizing thoughtful design, businesses can unlock the boundless potential of A.I. This not only ensures innovation and efficiency but also safeguards the trust and integrity that users and stakeholders expect in today’s digital age.